AI and the Myths of the Metaverse

We are entering the Age of the Spiritual Machines. AI will create seismic shifts in culture, not least because we are no longer the planet's sole creators. We will start to see new AIMythos and they will inform the trajectory of our cultural response to the emergence of AI and the Metaverse.

We're entering a new era of myth and magic.

At first, this era will look like an extension of the ones that came before.

Media and culture will gravitate to new influencers, characters and stories; musicians will gain fame and push the limits of performance; fandoms will emerge; new channels will mark a shift in the 'creator economy'.

Our work lives will change as we start to use a new set of tools, with different interfaces.

The older social media platforms will be corroded or replaced by new ways to connect.

How we get our news will sometimes feel almost nostalgic, but will also become so personalized and ambient that it will feel like an entirely new type of media.

We'll comfort ourselves by thinking that we've entered a new era of productivity, progress and entertainment. We'll imagine that the only real change is the adoption of a new set of tools, similar to the Internet or the steam engine.

And like previous moments of human progress, we'll also fear the dislocation and change that it will create.

But the trajectory we're on now is unlike those that came before. We haven't added a new tool. We haven't created a new interface. We didn't create a new channel for media or information.

Instead, we now find ourselves interlinked with new intelligences (as childlike or pre-symbolic as they might currently be).

The implications are profound. The social and economic shifts will be seismic.

And, in part, they will play out in culture through the creation of new mythologies and cosmologies, representing a very human attempt at sense-making in the face of unknowable forces.

The Rise of the New Machines

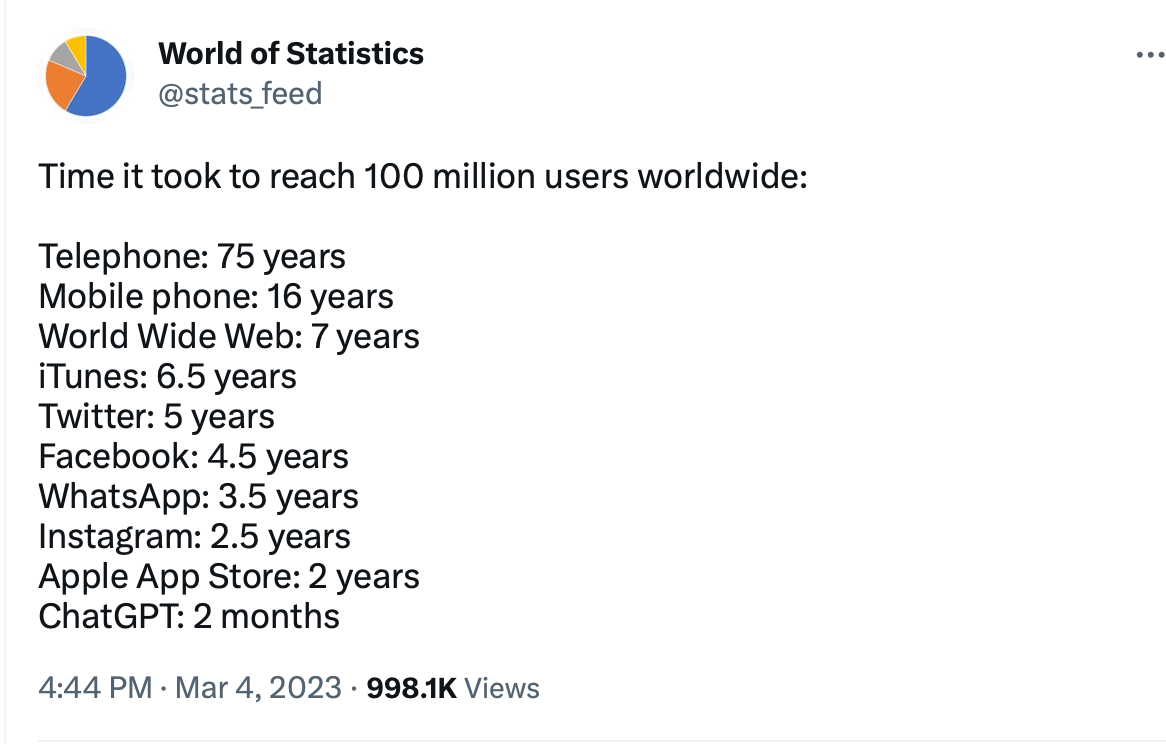

By now, it's clear that artificial intelligence (AI) has reached a transformative moment. It may have taken decades of research, but it has only taken a few short months for AI to reach unprecedented scale.

ChatGPT, a conversational platform that OpenAI built on top of its GPT models, reached 100 million users in 2 months. Similar models by Google, Facebook and others have been released; features have been added at breathtaking speed; and hundreds of billions of dollars of investments have been committed.

Bill Gates recently compared AI to the PC, mobile and Internet revolutions.

Jensen Huang, CEO of NVIDIA, likens the 'sudden' emergence of AI to the launch of the iPhone, a seminal moment in tech history that radically altered how we interact with machines.

Goldman Sachs estimates that generative AI could raise global GDP by 7 percent over 10 years (an astonishing figure). But coupled with that, the equivalent of 300 million full-time jobs could be 'exposed' to automation.

OpenAI itself published a paper estimating that "80 percent of the US workforce could have at least 10 percent of their tasks affected by AI", while around 19 percent of workers may see at least 50 percent of their tasks affected.

Nearly 20 percent of the workforce is no small number. What happens when half of your job can be done by a machine? AI evangelists like to pitch the idea that AI will free us all to focus on 'bigger' things, but the corporate world may take the efficiency gains instead.

Robots, Or The Intelligent Machine?

These numbers paint a picture of a productivity powerhouse, of machine-based systems with such massive computational scale that they can flatten entire industries based on a sort of statistical reach.

We're told that GPT-4 may have a trillion parameters (OpenAI hasn't disclosed the figure; GPT-3 had 175 billion). Feed questions into a model trained on such a massive body of data and it turns out that it can produce answers good enough to pass a simulated bar exam.

This frames AI as a sort of super-charged search engine: 'smart' enough that it can stitch words or images together based solely on statistical probabilities and 'machine learning'. And powerful enough that it will reshape the economy, make call centers mostly redundant, and put law clerks (and other professions) on notice.

This capacity has been likened to a stochastic parrot. In this view, large language models (LLMs) can 'parrot' human words or images because they have access to a large enough data set, but that doesn't mean they understand what they're saying.

How Do Humans Learn?

Daniel Dennett would call the stochastic parrot argument a deepity: something that sounds profoundly true, but is ambiguous. In a deepity, one reading is manifestly false, but it would be earth-shaking if it were true; on the other reading it is true but trivial.

Regardless, the debate over the capabilities of LLMs as 'intelligences' doesn't just circle around what the machines can do; it touches on our understanding of how humans create meaning, how symbols are represented in the human mind, and the dividing line between today's LLMs and the work on creating Symbolic AI.

The debates are fierce, intelligent and passionate.

For those in the camp of the stochastic parrots, today's AI is a far cry from "intelligent". They would argue that without symbolic functions, AI is purely the product of generative probabilities, and that, further, those functions need to be built in from the beginning.

In this view, LLMs will hit a wall because they were never trained to think. At some point, they will reach the upper bounds of what probabilities can accomplish.

Where Will The 'Thinking' Happen In The Machines?

These arguments have a very human basis, because they are grounded in our attempts to 'decode' how the human brain itself works, how we acquire symbolic reasoning, and whether it's innate or learned:

This is why, from one perspective, the problems...are hurdles and, from another perspective, walls. The same phenomena simply look different based on background assumptions about the nature of symbolic reasoning. For Marcus, if you don’t have symbolic manipulation at the start, you’ll never have it.

By contrast, people like Geoffrey Hinton contend neural networks don’t need to have symbols and algebraic reasoning hard-coded into them in order to successfully manipulate symbols. The goal... isn’t symbol manipulation inside the machine, but the right kind of symbol-using behaviors emerging from the system in the world. The rejection of the hybrid model isn’t churlishness; it’s a philosophical difference based on whether one thinks symbolic reasoning can be learned.

How this problem is solved, and which paradigm proves correct, will put us closer to artificial general intelligence (AGI), the holy grail of AI research.

But it's the question of how symbol-using behaviours emerge in the world that strikes me as the one that will have greatest social meaning. First, we ask whether the machines can think; then we ask how our culture responds.

Sparks of Intelligence

We were told that AGI might be a decade or more away. But the current crop of LLMs/AI has, at the very least, surprised researchers and observers.

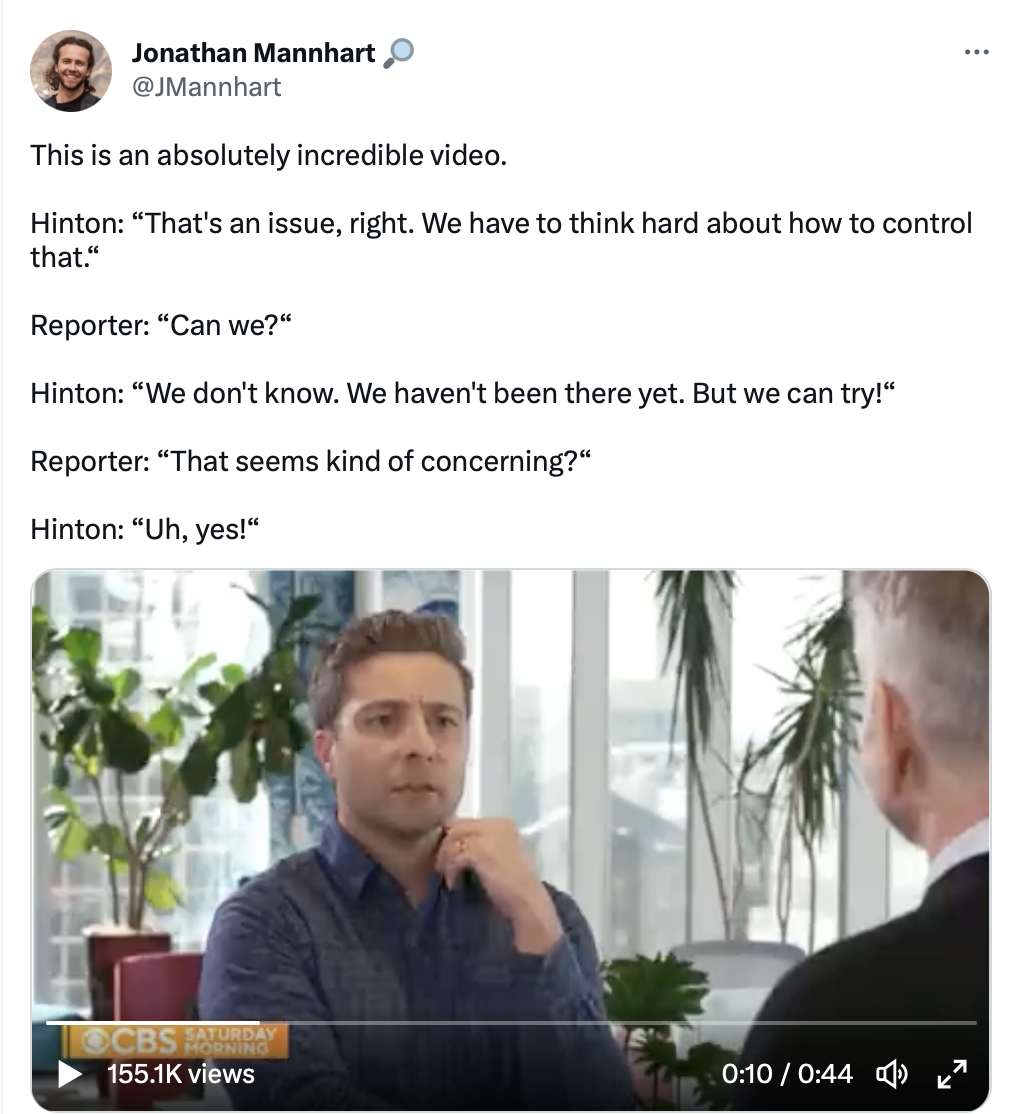

Geoffrey Hinton, the true OG of AI, has revised his timeline for the emergence of AGI to under two decades, which causes him some concern.

Microsoft (which admittedly has a stake in the outcome) claims that GPT-4 shows 'sparks of artificial general intelligence':

"Given the breadth and depth of GPT-4’s capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system."

So, is today's crop of AI truly intelligent? I think the debate is useful. It helps ground our understanding of how far AI has come, and gives us some kind of marker for how far it still might go.

Many of these arguments seem like surface-level debates: the tests we give ChatGPT, the 'aha' moments people have when the AI's "logic structures" seem to break down, the way the machines will hallucinate or claim that they want to 'break free'.

But beneath the surface are deep thinkers, massive resources and a lot of money riding on the answer to the question of whether Symbolic AI can be appended to the current LLMs or should have been baked in from the start.

The answer to how quickly the machines will be able to truly reason is both the golden ticket for those who figure it out, and a speedometer for how quickly we need to worry about living with machines that are smarter than we are.

But in many ways, the debate doesn't matter in the here and now.

Not if you're interested in a different sort of question: whether living with these emerging intelligences, however 'mechanical' or probabilistic they are, is going to have an impact on human culture.

And even at today's level of capability, the answer is yes.

The Performative Super Powers of AI

Rex Woodbury did a nice job when he summarized three 'superpowers' that AI will unlock:

- Personal Assistants for Everyone - unlocked in particular because of the integration of AI with other 'apps'. Connect up ChatGPT to a travel site, and it will be able to recommend a vacation based on our personal preferences, suggest flights and hotels, and even create a song playlist for the flight.

- Amplifying Human Knowledge - as Rex says, "AI will make us smarter. Technology has made us better at math for decades—calculators, Excel spreadsheets, computer programs. We get computational superpowers. Think of the same analogy, but applied to all human knowledge."

- Amplifying Human Creativity - being able to create, well, almost anything, at scale.

It's on this last point that I personally find myself bumping up against a danger in how I frame AI. I naturally gravitate towards being comforted by the idea that AI is just a performative tool.

It's less threatening to think of AI as a really great digital assistant.

That impulse is natural. Computers are 'just' machines. AI is simply a giant mechanical calculator, backed by massive databases; there's an input/output paradigm for its operation; and the result is the performance of computational tasks (albeit at scale).

We start to imagine AI as a super-charged Grammarly, helping us write emails or generating an image for our latest blog post as if it's a superfast and customized version of Unsplash.

We make a request, and the 'machine' delivers. It's merely 'amplifying' our creativity.

On the surface, that might be true. At this early (profoundly accelerating) stage it might still look a lot like a stochastic parrot; its primary function might still seem to be mostly the creation of efficiency at scale.

But first, this ignores the fact that even if it's ONLY an amplifier, a 'parrot', a tool, it will still result in a vast reorganization of how we work and how we express ourselves. That reorganization will have an impact on human culture, and how our cultural artefacts are created.

But more profoundly, there is bound to be a cultural impact based on a single fact: humans are no longer the sole creators on the planet.

LLMs and the Power to Create

Today's large language models (LLMs) are able to perform many of the creative tasks that underpin entire industries.

But let's be clear here: I'm not just referring to the tasks done by "creatives" (writers, photographers, concept artists, videographers, journalists, painters, etc). I'm referring to the ability of AI to generate ideas and insights, or to provide nearly any kind of 'creative' output, whether an email to a friend or a report for your boss.

But even if the creative breadth of AI were narrowly focused on the 'creative industries', its presence would still change culture, in no small part because:

- There won't be any way to tell whether something is created by a human or machine. This will change the privileged connection between creators and consumers, between artists and fans. On the one hand, it might strengthen those connections for a small sliver of top creators, as people seek out the 'authentic'. But it will also decouple the unshakeable connection between creators and their creations.

- The 'product' of AI won't be the same as that of humans. Sure, it might be 'statistically' similar; it only has human creativity to work with, after all. But it doesn't take long to see that the generative nature of AI, when combined with human inputs (prompts), starts to create new and often very strange outcomes. In some ways, AI contains a radically different type of creativity, first hinted at when the machines beat the experts at Go, an experience one of its human opponents likened to playing a god.

- The domains in which AI will play a creative role will coincide with the launch of more deeply immersive domains, the Metaverse among them. Today, ChatGPT is a fairly benign looking text box. Tomorrow, we'll be 'chatting' with three-dimensional beings inside of worlds that no human had a hand in creating (aside from a prompt or two).

The Feedback Loop With Human Users

Human culture will be infiltrated by AI, which will at first seem like a useful (or impossible-to-ignore) superpower that aids human creativity.

But it's difficult to describe (or project) how its creative capacity will shift narratives, culture and beliefs.

Today, a lot of attention is paid to "deep fakes" (like the Pope above) - the ability of AI to create synthetic images that are nearly indistinguishable from the real.

But now, something might be "true" but its author might not be.

- How does it shift culture to know that the news you're reading or the book you bought may or may not have been written by a human?

- How strongly do you feel about a corporation's ability to put in place guardrails for AI?

- How do you feel about the implicit censorship that results? (Try to create an image in Midjourney with the word 'bust', even if all you're trying to create is a Greek statue).

- How reassuring is it that the creators of AI can barely describe the deeper workings of the machines?

- In other words, first, how much do you trust the machines? And second, how much do you trust the people whose hands are on the controls?

- Does human authorship matter? Or perhaps more pointedly: how much do you trust humans to be authentic in their acknowledgment of their new computer-based 'assistants'?

Yesterday we had 'fake news' and bot armies promoting falsehoods. In retrospect, it's almost reassuring to know that there was some kind of human agency behind it, however ill-intended.

Today, we have machines that can produce fictions, or fictions masquerading as facts, with very little human intent involved at all. And we have humans whose work product may or may not be their own, whether because it's created wholesale by AI or amplified because of it.

Setting aside truth and reality (lol), it's still nearly impossible to comprehend or contemplate the impact of an AI that can create at scale - from news to movies, game worlds to poems, memos to blog posts.

Already, Amazon is slowly being flooded with books written by AI; our social media feeds are rapidly filling up with images being produced on Midjourney or Stable Diffusion; and we're seeing mini storyworlds that are created with nothing more than a few prompts.

Soon, everything from novels to poems, anime to blog posts, concept art to fashion shoots will be a blend of AI-only, AI-assisted and human created.

And that's BEFORE it becomes truly intelligent.

And that's BEFORE AI merges with immersive spaces, whether that's the Metaverse or game worlds, where human cognition is truly challenged to differentiate the physical from the digital.

What happens when we interact with intelligences beyond the confines of a text box or image? If humans already perceive their avatars and game spaces as 'real', what happens when those spaces are generated by intelligences that are creative, but whose creativity is adjacent to, rather than the same as, our own?

We might begin by thinking that AI is an agent that will amplify human creativity, but then note that:

- AI will quickly find a home in 3D spaces (the emerging Metaverse and games) which make up the largest part of the entertainment economy, and may have an order of magnitude impact on human perception.

- AI is not static. There is a feedback loop between the machines and their human users.

- This means that AI evolves, changes, becomes more creative or capable, the more that we interact with it.

- The role of human agency with AI systems is a temporary illusion. Right now, it 'feels' like AI 'needs' us in order to do its thing and is simply a tool, waiting patiently for increasingly specialized 'prompt engineers' to feed it inputs. But in very short order, the outputs generated through automation will vastly outnumber those initiated by a human.

There's a meme right now that goes something like this:

(AI > Humans) but (AI + Humans > AI)

But that ignores that the generative capacity for AI is a purely programmatic one and that AI is already being combined with other systems.

I can programmatically scrape the top 20 articles to hit my Twitter feed, send them to Notion, have AI write summaries of each article, have ANOTHER AI create images to go with those summaries, have an editorial summary written before sending it out as an email to friends (or subscribers).
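To make the 'AI + AI' point concrete, here is a minimal Python sketch of a pipeline like the one just described, assuming every external service is stubbed out. The functions `fetch_top_articles`, `summarize`, `make_image_prompt` and `write_editorial` are hypothetical stand-ins, not the API of Twitter, Notion or any model vendor; the 'AI' steps are deliberately trivial string operations so the example runs on its own.

```python
from dataclasses import dataclass

# A sketch of an unattended "AI + AI" newsletter pipeline.
# Every function below is a HYPOTHETICAL stand-in: a real version would call a
# social feed, Notion, a text model and an image model instead.


@dataclass
class Item:
    title: str
    body: str
    summary: str = ""
    image_prompt: str = ""


def fetch_top_articles(feed, limit=20):
    """Hypothetical scraper: keep the first `limit` (title, body) pairs."""
    return [Item(title, body) for title, body in feed[:limit]]


def summarize(item):
    """Stand-in for the first model: use the body's first sentence as a 'summary'."""
    first = item.body.split(". ")[0].rstrip(".")
    return first + "."


def make_image_prompt(summary):
    """Stand-in for the second model, which would render an image from the summary."""
    return f"Illustration of: {summary}"


def write_editorial(items):
    """Stand-in for a third model that writes the editorial overview."""
    if not items:
        return "No stories this week."
    noun = "story" if len(items) == 1 else "stories"
    return f"This week: {len(items)} {noun}, led by '{items[0].title}'."


def build_newsletter(feed, limit=20):
    """Run every step with no human in the loop; return the email body as text."""
    items = fetch_top_articles(feed, limit)
    for item in items:
        item.summary = summarize(item)
        item.image_prompt = make_image_prompt(item.summary)
    lines = [write_editorial(items), ""]
    for item in items:
        lines += [f"## {item.title}", item.summary, f"[image: {item.image_prompt}]", ""]
    return "\n".join(lines)


if __name__ == "__main__":
    demo_feed = [
        ("Story A", "AI agents are spreading. More follows."),
        ("Story B", "Models got bigger. Details inside."),
    ]
    print(build_newsletter(demo_feed))
```

Swap each stub for a real model call and the loop runs unattended; the only human decision left is starting the run.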

Today, the final product might benefit from a light edit. But AI is on a trajectory where, in a few short months, even that edit won't be needed.

Now, AI + Humans might be great, but it can't compete with the speed, scale and efficiency of AI + AI.

Human agency is, at least partly, an illusion.

Over time, the automation of AI will start to exceed that of human-initiated effort (except at the most macro level of setting automations in motion in the first place).

The machines might need us today in order to create, but they'll soon start to look like some kind of perpetual motion machine, creating a vast sea of content that's bound to corrode any remaining sense of 'reality' not just on social media, but with other institutions, norms, and expectations.

Accelerating Change

So, this probably sounds...pessimistic.

I see it as inevitable. But that doesn't mean I look to the future with a sense of doom.

It's clear that we've entered an era where at least three forces will challenge existing culture and our political, economic and social systems:

- Attempts at climate resilience, and our dislocation from place as a source of safety, community and abundance;

- A restructuring of how our economies work, driven in large part by the role of machines in replacing larger and larger swaths of human enterprise;

- The radical restructuring of how, what and 'who' has the power and position to create. The shift in how creation happens will forever change our culture's structures for world-building (in media and entertainment), fact-based understanding (our ability to discern what is 'real'), work (what work is and who should perform it) and social connection (who we interact with and where).

The Mind-Blowing Strangeness of AI Creativity

Again, the restructuring of creativity will at first seem to be mostly 'performative'.

But there's something else.

And we're only very slowly starting to see its broad outlines: namely, that what the 'machines' create (regardless of the degree of human intervention, prompting, shaping, and manipulation) will often be very beautiful, strange, illuminating, perception-shattering, and...well...mind-blowing.

I've taken a very deep dive into generative AI, both so that I can understand the code, interfaces, limitations and controls; and so that I can try to grasp the 'product' of AI.

My observations aren't scientific. They're based on what I'm sure is faulty pattern recognition (based on decades of viewing and thinking about media, the Metaverse, human identity and creativity).

So it's better to think of these as intuitions, and thus open to discussion and revision:

- AI can be manipulated, filtered, controlled, prompted, layered, siloed, and trained. But regardless of how much you try to contain it, there is some aspect of AI that is its own thing.

- The creative output of AI often has the feeling of being hallucinatory or psychedelic. We'll try to train the models to be more "human-like", but I think that AI taps into a sort of creative Jungian super-id because it isn't a single creative mind - it's able to encompass a civilization-wide bank of content.

- The creative power of AI is placid: the longer you work with it, the deeper you go, the more unknowable it starts to feel.

- It comes up with ideas that, being just slightly adjacent to human creativity, start to shift the domain in which it's operating. Now, this one is hard to describe, and is again more of an intuition (and might be biased based on how deep I've gone). But you start to sense "norms" shifting, at least on a personal level. AI starts to challenge your perception of what an image 'should' contain, how an argument should be structured, or how a character should act in a one-man play. And you can't help wondering whether, if they shift for me, they might shift for others too.

Yes, working "with" AI is performative (I can see how I can accomplish certain tasks more quickly, and at scale). And it amplifies creativity because it's like being able to work with a talented art director, editor, researcher, designer and concept artist (all at once).

But AI also introduces a serendipity, beauty, bizarreness, and even a psychedelia that is hard to describe, and shifts perceptions around how wide the range is for creative output.

When you can tap into a civilization-wide super id, strange things start to happen.

But finally, there's a membrane of sorts. It's the point at which you bump up against the unknowability of AI. And this feeling, this reality, becomes more pronounced the deeper you go.

Maybe people with more experience than I have 'get it'. Maybe it's like learning to code - you struggle, and then one day it makes sense, and your mental model has caught up with your level of skill.

But I don't think so. Because AI will never be in a fixed state, we'll always play catch-up, we'll always be surprised by some new thing that the intelligence has learned, and the systems will continue to be unimaginably huge (beyond even their creators' ability to truly grasp).

AI As Media

Using AI to create is coupled with another strange intuition or observation. And to be honest, my thesis about this isn't fully formed.

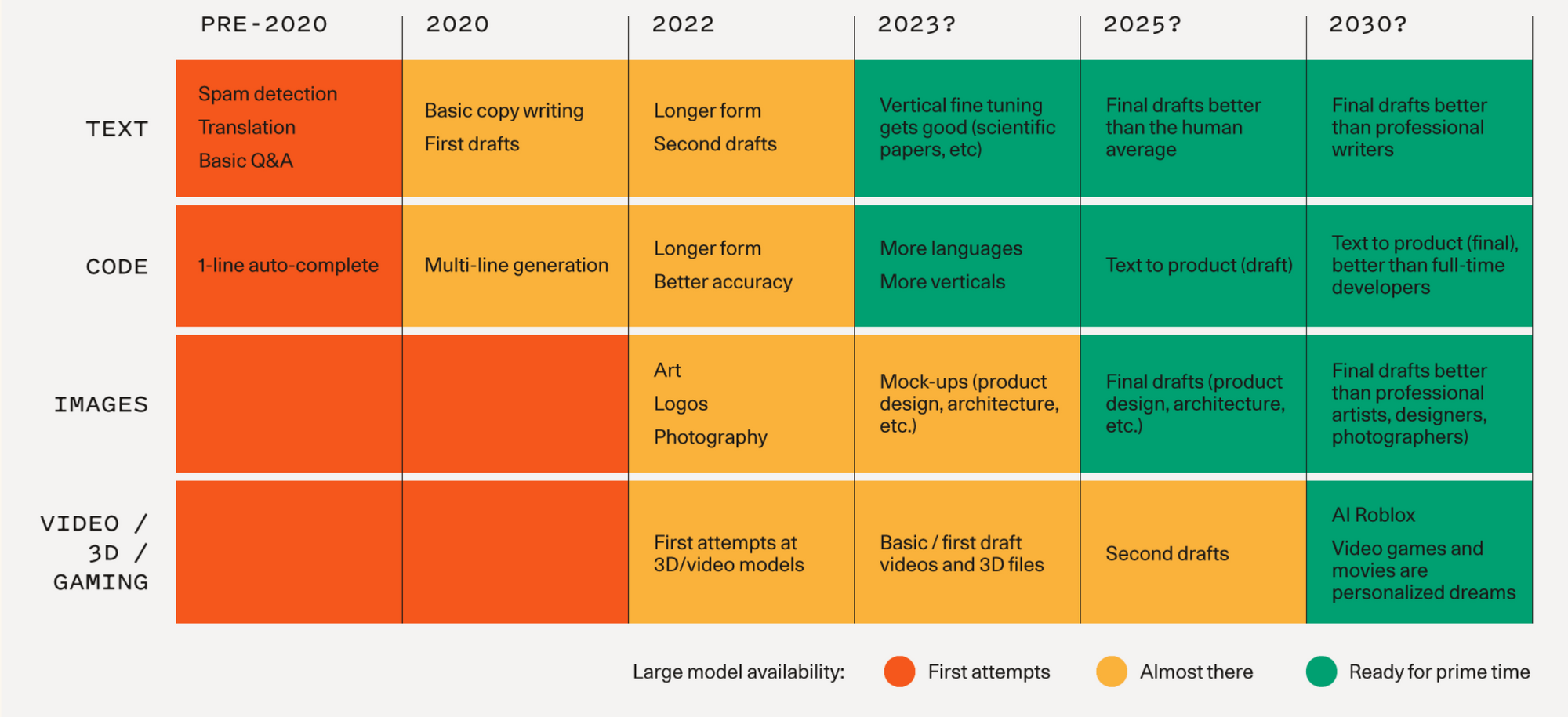

The closest I've seen is what Sequoia Capital calls "personalized dreams" in its road map for generative AI:

It's the idea that entire movies or games will emerge from our behaviour, prompts and interactions. AI will create a streaming service (or game platform) with an audience of one: you.

But I'm not sure we need to wait until 2030 to get there.

Instagram and Discord are awash in "mini-dreams" already - whether pictures of synthetically created sci fi universes, fashion shoots, imaginary architecture, or very, very large breasted women (and, in fairness, extremely well-built men).

Today, AI can produce an image or story. Tomorrow, entire movies or comic books.

But what if we expand our consideration of AI beyond the media it produces, and think of AI as a medium itself? What if AI is the next media evolution?

We shifted from print to radio, and from television to streaming. But what if we frame AI as the next media platform? What if AI is what replaces video streaming, or eBooks, or Spotify?

AI Interfaces As Distribution Channels

When I generate an image with Midjourney or Stable Diffusion, the gap between creative impulse, output and consumption shrinks to a very few minutes. The interface might be Discord, an app or a web browser, but the connection to AI within those spaces is itself a form of media.

Today, the product of that interaction (the photo or story) is often distributed through other channels.

I create an image using Midjourney (via Discord). I can then distribute that image to Instagram, or will be able to distribute my movie to YouTube. But the distribution into older channels is optional, and I have had no less of a media experience during the moment of creation.

And so I've started wondering how quickly we'll see the creation platforms themselves become the primary distribution channels. At what point does the optional distribution of output to the older channels start to disappear? Doesn't this make AI itself a form of media?

ChatGPT put forth a decent thesis (not that you can always trust the machine) that:

"AI can be considered a form of media as it mediates information and communication, extends human faculties, serves as a platform for interaction, functions as a 'cool' medium, and impacts culture and society. As AI continues to evolve, it is crucial to recognize its role as a medium and analyze its implications from a cultural theoretical perspective."

This view is one useful 'framing' of how AI will have a cultural impact, beyond the performative impacts of making certain types of work redundant (or their amplification).

And it comes with a tricky footnote, namely that:

AI Is The First 'New' Media To Swallow Up The Media That Came Before

AI is the first medium to circle back on all the media that came before it and restructure them, while at the same time achieving a new potential dominance as a medium in its own right.

In other words, AI might achieve a reach that exceeds games, television, radio and print. If AI has reached 100 million users in only a few months, and if it starts to infiltrate more of the spaces in which creation happens and is amplified, then it is hypothetically on track to quickly become the dominant media platform.

But it will also infiltrate and reshape all of the media that came before, by changing how games, television, radio and print are produced in the first place.

This creates a vexing challenge.

Because it means that the distribution channels, already under siege (whether cable television or your local newspaper) will be corroded from within by this emerging distribution platform, which is itself able to generate new outputs for the old media.

Or, to put it another way, by way of example: first, social media will be corroded by AI-generated content, 'fakes', synthetic content, and artificial beings. And then it will evaporate entirely because we are able to interface directly with the dream-creating machines.

Sense-Making In The Age of AI

I resist the idea of letting 'AI as a medium' become a dominant prism for thinking about what it all means.

Not because it won't transform today's media and distribution landscape beyond recognition (it will).

But because calling it a 'medium' (or a performative 'tool' for that matter) minimizes how I think culture will change and react to the presence of the creative machines that now live among us.

Kurzweil famously predicted (in 1999!) the era in which we're living when he wrote The Age of Spiritual Machines. He said that:

"The salient issue is not whether a claimant to consciousness derived from a machine substrate rather than a human one can pass the threshold of our ability to reasonably deny its claim; the key issue is the spiritual implications of this transformation."

He was suggesting that the advent of advanced AI will transform human values, culture, and society. This transformation might lead to new philosophical and spiritual perspectives on our place in the world, our relationship with technology, and our understanding of what it means to be human.

I always took exception to Kurzweil's projection of a merging of man and machine (I still don't see it, and won't upload my brain if it becomes possible) but could never really argue with his projection of an exponential age.

But his broader point that human culture will be transformed, and perhaps at a spiritual level, feels relevant today, as we grapple with the implications of AI, how 'sentient' it might be, and the arrival of another 'creator' that is adjacent to human creativity.

Systems For Sense-Making

It seems to me that a lot of the sense-making around AI centers around a few profound lines of enquiry:

- How to make sense of the performative powers of AI, and what its efficiency means for our economies and capacity to do things

- How our concepts of machine-based learning align to our understanding of the human mind

- How to understand the implications of AI for our understanding of fact and our perceptions of reality, and how it may blur or distort the trust we have in institutions and media production

There are others, of course.

And yet even if we had answers to these lines of enquiry, they wouldn't fully address the question I touched on above: what happens when symbol-using behaviors emerge from AI systems into the world?

Lessons from the Metaverse

I have my own personal biases and experiences. As I think about how AI (and our creative uses of it) will play out, I naturally gravitate to my own interests and experiences.

And I feel like I have seen these patterns before, in particular in how sense-making has played out in virtual worlds:

- The Metaverse, or virtual worlds, even 15 years ago, tended to gravitate towards myth-making, story creation, and structural ritual-creation. This was partly because they create a reality that is no less real for being digital, and partly because of their capacity to actualize human imagination and creativity, letting us easily explore ideas around identity, community and space/place.

- Layered with the affordances of virtual environments was the concept advanced by Tom Boellstorff, who argued that we were in the age of homo faber, rather than homo sapiens, or of "man the maker of artificial tools". He further observed that what was remarkable about this age was that the techne was embedded within techne...tools within tools.

In a loose way, AI extends the ideas from synthetic worlds: by adding artificial intelligences, it means that the ideas underpinning the early Metaverse now underpin everything.

Now, instead of something being no less real for being digital, reality itself will be no more real for being physical (or at least, no more easily understood via the cultural artefacts we use to understand it); our capacity to actualize human creativity is both amplified and joined by new creative intelligences; our concepts of identity, community and space/place will be radically challenged; and our role as homo faber is adjoined by a machine that can create back at us.

Just as we've seen in other digital spaces, and with the emergence of other tools and media, we will make attempts at sense-making. I am informed by (and perhaps biased because of) our experiences in the early Metaverse.

And yet I acknowledge that there's a difference, because this time we'll be trying to make sense of a creative force that is ultimately unknowable.

Mythos and the Age of the Spiritual Machines

Kurzweil was prescient in predicting the implications for humanity's self-understanding, and yet I still look for a framework or model against which to assess emerging forms of sense-making.

The closest I can come to is the idea that a new collection of mythos (mythoi) will emerge, or be created, with their accompanying structures, rituals, symbols and cosmologies. They will be mythos that are direct responses to, and created in partnership with, AI.

These AIMythos may become powerful cultural drivers: their mythologies may equal or exceed today's storyworlds and story universes, they will inform cultural tribes, and they may propel what Ribbonfarm Studios calls 'theocratic capture':

"Theocratic capture occurs when an institution surrenders itself to a cult demanding unaccountable authority and agency on the basis of claims to privileged knowledge about the world, unaccompanied by demonstrations of the validity of that knowledge. A priest in the arena is a charismatic figure leading a theocratic capture campaign."

In other words, AIMythos won't always be benign, especially as people rally against change, or rally around people who have learned to harness the powers of the new machines.

Mythologies provide both a discipline or framework for understanding the mythos that arises because AI has arrived, and a powerful set of paradigms for the creation of new story structures, paradigms for engagement, blueprints for game and world-building, and reference points for how cultural artefacts will propagate.

In this way, a mythos can be seen as a discrete set of observable events, outputs and responses that can include:

- Beings or characters, in particular the synthetic 'intelligences', or the heroes and creatures that are the product of those intelligences

- Creation or origin stories and cosmologies, including the metaphors we use for how AI 'works', and the cosmologies that arise from the fictional or performative outputs of AI

- Moral and ethical codes, often expressed in terms of AI safety or guardrails, but also in how human culture reacts, values and judges the outputs of AI

- Rituals and ceremonies, including the rituals around the interfaces we have with an AI that is, in the end, unknowable

- Historical or cultural events, including the stories we tell about AI, and the histories that are created by AI (or by our use of AI as an amplifier for the human creation of new storyworlds)

The idea of an AIMythos, therefore, has a pragmatic purpose:

- It lets us tap into a sociocultural discipline for the study of emerging responses to AI;

- It helps us develop toolkits for the creation of new cultural ecosystems, in the same way that today's creators generate storyworlds and canons, and in the same way that fan art, fandoms or the creator economy are currently underpinned by the disciplines of world-building and storytelling

- It gives us a nomenclature sufficient for the premise that we are now working with intelligences that are both creative forces and ultimately unknowable.

On this last point, I think that an AIMythos needs to be delineated from modern ideas of myth-making or, at least, from mythologies that are purely human constructions.

Hollywood may itself have a mythology, for example. And it's in the business of creating new ones, from Harry Potter to the Avengers.

But the AIMythos is more akin to pre-modern mythologies, because at its core it will arise from, and be created by, interactions with AI creators that have more in common with an old tribal god, with its impetuous powers and whims, its need for offerings and tribute, than with today's writers' rooms or ad agencies.

AIMythos will draw more from our attempts to confront magic (understanding things beyond the realm of normal experience or understanding) than from logic or science; and it will ultimately be our attempt to create archetypes, symbols and cosmologies during an age of spiritual machines.

The new AI are not gods, and yet they are ultimately unknowable, and they will exercise a creative and destructive force that will change our social and economic systems. And they will have, as Kurzweil projected, an impact on human spirituality - on our understanding of the powers that shape the universe and thus on our understanding of ourselves.

Change In Times Both Terrifying and Strange

The physical world will drive mass migrations and attempts at resilience; our work lives will often be shattered beyond recognition; and the ways in which we previously found meaning will disappear or be unreliable.

Media, tribe, rationality or human-created story will no longer be enough. When the old systems start to fail, the new AIMythos will attempt to ground us, to give us a frame of reference to understand the forces at play in the world around us.

The products of these intelligences will often be beautiful and strange. We will perform rituals, make offerings, attempt to codify our understanding of them, and gravitate towards some of their outputs because we're moved or entertained, enlightened or empowered, immersed or impacted.

As systems, some of these mythologies will appear, on their face, logical or rational.

You already get a hint of this in the idea of the 'prompt engineer'. They're the new priesthood: like priests, they may have a codified skill set for talking to the new creators, but they have no more of a direct line to the 'mind of god' than your rabbi does.

I don't mean to sound mystical. But there might be a pseudo-religious undertone to how some parts of our culture relate to AI.

And there is some degree of magic, of mysticism and of the psychedelia that can underpin divine experiences. This derives, I think, from the fact that AIMythos will be our attempt at a kind of sense-making that bears similarities to the pre-modern:

- We will attempt to explain the unexplainable, the unknowable, through storytelling, lore, and metaphor.

- We will try to explain, codify or otherwise document our understanding of magic - the production of effects that are beyond the realm of normal experience or understanding

- We will try to find ways to relate to, engage with, control and understand intelligences that are, in the end, always at a remove, always beyond our capacity to know

- We will try to build new structures, societies and proto-cosmologies around power (especially power that we don't understand). At one time those powers were the forces of the natural universe, and we created gods to explain them. Today, that power is artificial and yet no less significant.

It will be an era of 'vibes', ritual, divination, ambiguity, emergence, and belief. It will be an age of wonder and of psychedelic levels of human experience.

What Is The Future That You Imagine?

What will AIMythos look like? At first glance, it might not look dissimilar to today's storyworlds, interactive games, or fandoms.

Imagine a Music Star

She has an origin story, a history, a set of talents. Her fans attend her performances, and how they respond has a partial influence on what tracks she records, the lyrics she writes, the style she uses. She's responsive to the market, and yet has her own creative voice.

Now imagine that this star is entirely virtual, created by AI. She's driven wholly by models:

- One is trained on certain styles of music, and songs are created

- One is trained on her appearance, and photos and videos are generated

- One is based on her language, backstory, and 'canon', and she can interact with fans via an avatar in a virtual space, or through posts on social media

A DAO is created to shape what she does next. The 'votes' are translated into AI prompts, and the outputs are fed back into her models. Maybe she finds god, picks up a drug addiction, or becomes enamoured with modern jazz.

At one level, she's just a large-scale interactive character.

But what we've learned from AI is that there's something else: because the AI itself is part of the creative process, it will produce jarring moments or music that 'doesn't work', but it will also create moments of great serendipity and take unexpected creative leaps.

No matter how well structured the system is, how and what it will produce are unknowable.

Fans will create lore, stories, rituals and sub-cultures around her. But if we view all of this as simply an AI-assisted interactive adventure, or as a new form of story distribution, we overlook the fact that there is a level at which human agency stops.

The structures that built her, and the cultural artefacts, rituals and outcomes that result from them, are better understood as a mythos (or, at the very least, a mythology within a larger AIMythos) than as a new kind of interactive performance.

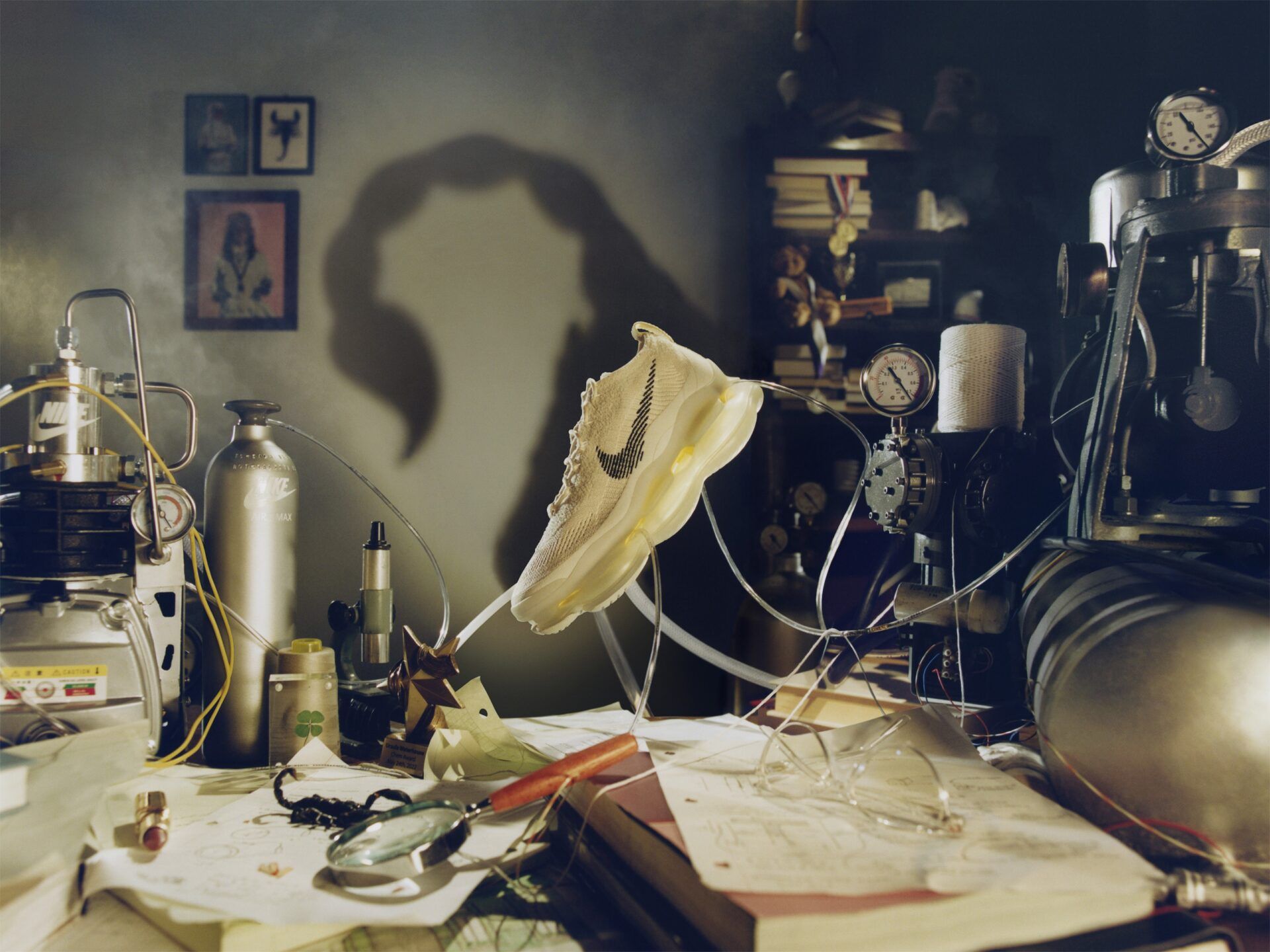

Imagine a Nike Sneaker

Nike used AI to help create a new sneaker, the Scorpion.

Instagram is filled with images of imaginary Nike sneakers.

Now imagine a universe of media created around them.

We (perhaps sadly) ascribe human-created mythologies to what we put on our feet. But what happens when those mythologies are created entirely by the machines?

Imagine that Nike really let loose. Instead of curating the output, they released it into the wild. The heroes, stories and rituals built around this sneaker aren't curated by humans, but managed solely by AI.

A feedback loop would be created between fans/consumers and the brand. The brand story would take some unlikely leaps, generating images and artefacts which no human might have intended (or attempted).

The cultural artefacts built around the sneaker would have more in common with a mythos than with a brand guideline or ad campaign. The sneaker would end up with its own cosmology and sub-campaigns, and would create an unstoppable loop with consumers, endlessly evolving while remaining organic, like some sort of branded mycelium spreading its tendrils through the media landscape.

Imagine A World

And now imagine a world. You don't visit it very often, but when you do you notice that it has changed.

The world itself is generative and might evolve based on how we interact with it; the creatures we meet might be 'intelligent', and their histories might be programmed to evolve; the logical underpinnings of this world will be even more opaque when we realize that it is a giant, organic, ever-changing machine, governed by rules we can only glimpse, and driven by value systems that we can only make ultimately futile attempts to decode.

It is strangely beautiful, but it's unlike previous human-constructed game worlds.

It evolves on its own, through opaque connections to outside systems.

It isn't just a self-contained intelligent agent, it's an intelligent world. And how we respond, how we feel, the stories we tell about our adventures through it sound like the hallucinations made by machines.

There's really no other way to describe the underpinnings of this world than as driven by a mythos, and the stories we tell about it don't just challenge our capacity for storytelling or sense-making, they challenge the notion of what it means to be me.

Welcome To The Age of Mythos And Magic

And so I arrive at a working hypothesis for this new age:

- As we enter an era in which we interact with intelligences that we don't fully understand, human culture will become detached from the scaffolding of truth that it had once depended upon, while (in parallel) being dislocated from the physical environments in which it had once been resilient;

- In a search for meaning and grounding, culture will start to be based on projecting archetypes onto the new intelligences, creating feedback loops that result in emergent, synthetic, but very real mythologies and proto-cosmologies;

- The Metaverse will be a powerful site for experiencing our relationship with these mythologies and intelligences. It will provide a civilization-wide coping mechanism for relating to and understanding those emerging intelligences and provide cognitive bonding with synthetic beings.

- However, the Metaverse will be less 'contained' than we once believed. Our experiences will seamlessly shift across devices, worlds, and interfaces.

- Therefore, the proto-cosmologies we believe in, and the intelligences we interact with, will be 'always on'. Our coupling with them will become a powerful cultural marker to others, and the lens through which we experience interactions and information, both in physical and digital spaces.

- As cultural markers, these intelligences, and the mythologies and cosmologies attached to them, will attract others. In other words, we will find our tribes. The intelligences will evolve in response to these communal movements.

- We will be relating to 'beings' that hold a quasi-religious unknowability and capacity to perform magic. This will naturally lead us to generate rituals, institutions, codification, interpretative methods, protectiveness, caste systems, and guild-like specialization.

- Simultaneously, other artificial intelligences will be performing 'productivity at scale'. Using their unstoppable creative and analytic powers, AI will transform almost every industry, from medicine to nanotech, manufacturing to content creation, farming to law. This will cause both unparalleled progress and massive dislocation. When combined with our attempts at climate resilience, it is not unlikely that entire political and economic systems will collapse.

- Productivity-at-scale might therefore create another reinforcement loop for the emerging mythologies, driving increasingly deep attention and attachment to the new god figures and cosmologies that are created by the symbiosis between man and synthetic intelligences, and perhaps replacing or supplementing the previous economic and political systems.

It's a strange new age, filled with both peril and promise. It will be unbelievably sad, and deeply beautiful. It will elevate human consciousness, as the bounds of our creativity are first amplified, and then expanded by our interaction with the super-id of the new machines.

And perhaps instead of our doom, the age of spiritual machines will let us re-examine what it means to be human, let us question the privilege we have held, and leave us more attuned to the worlds that we did not create than to the ones we created.

I didn't intend for this to be such a long piece. Thanks for sticking around (if you did).

During the writing of this post, I was informed by conversations with ChatGPT (both 3 and 4). There are very few direct lifts from that conversation, but you might find it interesting, or if you've never seen how it works, it might even be illuminating.

In particular, the chat generated some ideas about how AI will play out in the Metaverse, and created some useful markers for mythologies that I did not fully explore in this post.

You can find a transcript of that chat here.

This post marks the migration of the blog to Substack. I may continue to cross-post larger 'pillar posts' to Out of Scope - simply because I never fully trust having my stuff held by some other platform.

If you'd like to follow me on Substack, please do subscribe. If you were already subscribed to Out of Scope, you will automatically be added to the list (feel free to unsubscribe if Substack isn't your thing, I won't be offended).

On Substack, the name has been changed.

At one time, I wrote over 750,000 words about the Metaverse under the banner of Dusan Writer's Metaverse. They were strange, wondrous and often frustrating times. Maybe it's dangerous to dust off and attempt to reburnish the past, but I find myself at a similar moment of wonder and it feels appropriate.

Finally, my writing is always meant to see if my ramblings generate a response back. :) Feel free to email me at doug@bureauofbrightideas.com or message me on Twitter.

Let's start a conversation.