Scan Everything: Building the Infrastructure for Augmented Reality

Everything is being scanned. From avocados to Amsterdam, from your living room to the local park, it has become easier than ever to take 3D snapshots of physical things.

This has profound implications for the next major shift in computing: to one in which spatial awareness, machine learning and new devices combine to radically change, well, everything.

And yet this shift is nearly invisible to those who don’t work in simulated reality (Apple’s term for XR/AR/VR/AcronymX).

It can even get a bit confusing inside the industry, with so many terms floating around. But Matt Miesnieks makes an important point:

There are dozens of ways to capture reality. And they’re arriving at different resolutions.

LiDAR, Cameras and Scans for Everyone

Some people like to see photos of food, fashion or cute animals in their social feeds. Myself? I like looking at the latest scans. Call me geeky, but I find this way more sexy than a photo of the latest loaf of bread someone baked:

Now, this isn’t that much different, really, than taking a selfie in Snapchat and adding a face filter. Your camera is being used to detect the dimensions of what it sees. Snapchat is simply taking a ‘scan’ of your face, detecting its contours, and the ‘filter’ then attaches effects and elements to this unseen scan.

But with photogrammetry, you can export this scan.

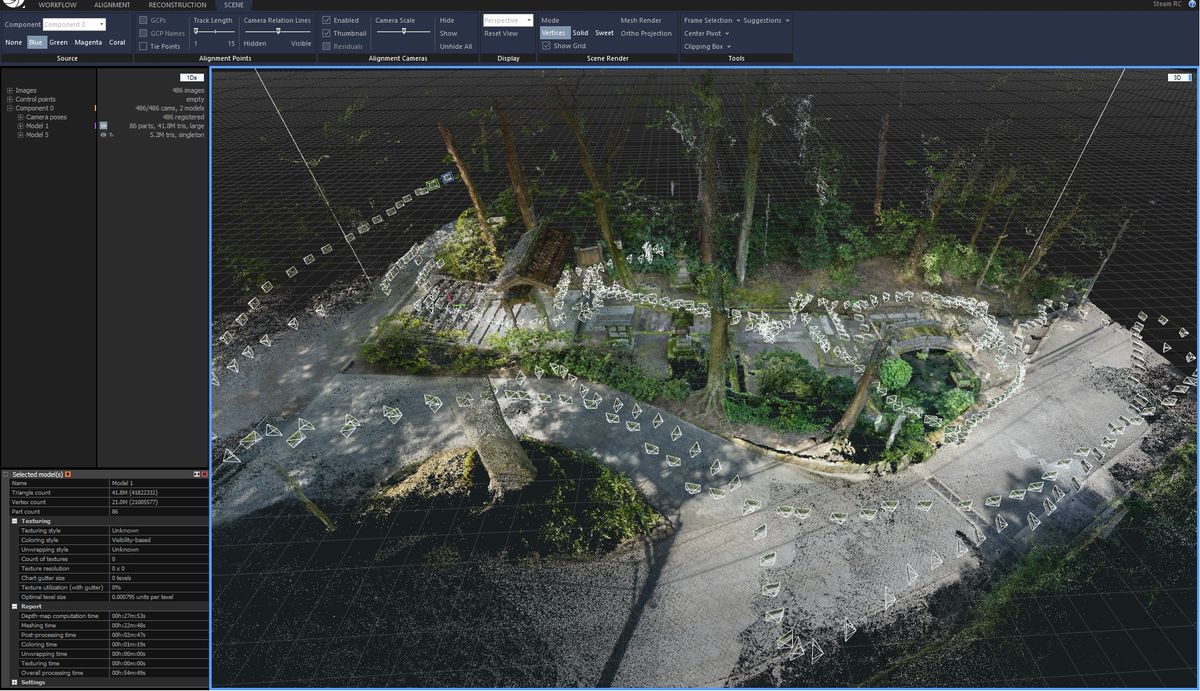

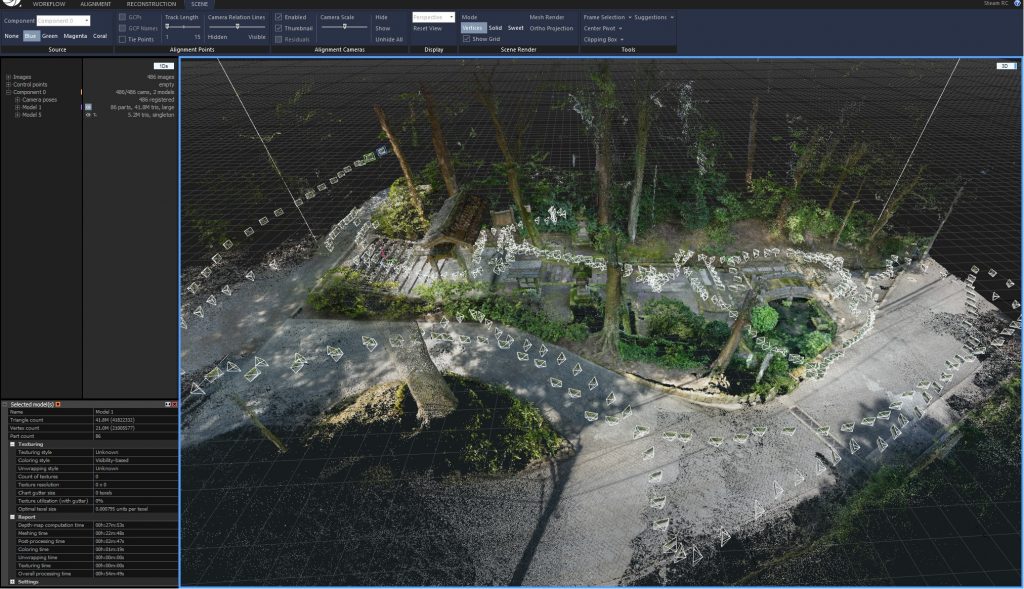

This, for example, isn’t “real”: it’s a scan, processed with RealityCapture software and exported as an asset that can be used elsewhere:

The above is a walk-through of an entirely digital ‘twin’ based on interpreting a camera ‘walk-through’ of a physical space:

Until recently, the camera on your phone was the most powerful way to scan 3D objects, faces and spaces. “Behind” the camera was a lot of computing power. Being able to interpret video and convert it into a 3D digital object using software was a big lift.

Over time, the software became more accessible.

But if you wanted, for example, to scan a whole city, you needed a Google car driving around with a lot of equipment on the roof.

Apple Brings LiDAR to the Masses

When Apple added LiDAR to the iPad Pro, it maybe didn’t give you the ability to easily scan a whole city (there’s a limited range to what the LiDAR can see), but it HAS allowed developers to do some pretty detailed scans of nearby rooms and objects.

Combined with advances to ARKit, the software that developers use to create AR experiences for iOS, you can use the LiDAR to rapidly detect the contours of the world around you:

This will allow for AR experiences that are richer and more ‘realistic’. But combined with machine learning, it will also power a new generation of apps in other categories.

I’m expecting, for example, that the launch of iOS 14 will bring an explosion of fitness apps which give you a ‘virtual coach’:

Large Scale Scans and Machine Learning

So, the ability to scan nearby objects and environments is becoming more and more accessible because of advances in how well your phone’s camera can “see”, as well as the addition of LiDAR to devices like the iPad (and the upcoming iPhone).

As we shift our view to a larger scale, the kinds of scans that were once reserved for Google Street View cars or owners of highly specialized (and expensive) equipment are now possible at a lower price.

As a result, large-scale scanning is no longer either a niche business or something available only to big players like Apple and Google.

Once a leader in “professional” LiDAR scans, Velodyne now has competition, with companies in the field raising millions of dollars in funding to support hundreds of customers.

We can stitch together multiple scans to create high-resolution digital twins of large spaces that contain a lot of objects:
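To give a rough feel for what “stitching” means, here is a minimal Python sketch. It assumes each scan is just a list of 3D points and that we already know where each scanner stood and which way it faced (its pose). Real pipelines have to estimate those poses from the data itself, which is the hard part. The function names and sample numbers are hypothetical, purely for illustration.

```python
import math

def rigid_transform(point, yaw_degrees, translation):
    """Rotate a 3D point around the vertical (z) axis, then translate it."""
    x, y, z = point
    theta = math.radians(yaw_degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    tx, ty, tz = translation
    return (x * cos_t - y * sin_t + tx,
            x * sin_t + y * cos_t + ty,
            z + tz)

def stitch_scans(scans):
    """Merge several scans into one point cloud in a shared frame.

    Each scan is a dict with its local 'points' plus an assumed-known
    pose ('yaw_degrees' and 'translation') in the shared frame.
    """
    merged = []
    for scan in scans:
        for point in scan["points"]:
            merged.append(
                rigid_transform(point, scan["yaw_degrees"], scan["translation"])
            )
    return merged

# Two made-up scans: the second scanner stood 1 m along x, turned 90 degrees.
scan_a = {"points": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
          "yaw_degrees": 0.0, "translation": (0.0, 0.0, 0.0)}
scan_b = {"points": [(1.0, 0.0, 0.0)],
          "yaw_degrees": 90.0, "translation": (1.0, 0.0, 0.0)}

merged = stitch_scans([scan_a, scan_b])
```

Once every point lives in the same coordinate frame, the merged list behaves like a single larger scan.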

Pixel8, meanwhile, is creating a “common ground truth” which aligns different inputs to create a digital twin of large-scale spaces.

They took a group of 25 volunteers with phones and were able to combine the data into a large-scale digital twin of Boulder, Colorado.

But where THIS gets interesting is the ability to then take that scan and segment it:

Because NOW scans aren’t just 3D models. They’re models with semantic awareness.

Think of it like photos: at one time, a photo was just an object, an image. But now Facebook or Apple knows that the photo is of YOU. That the person standing next to you is Joe, your best friend.

Apple sends you “memories” with titles like “Your fuzzy friends” and gives you a collection of photos of your dog. Static images can now be interpreted and the objects in them detected.

This same awareness is now being carried over to 3D scans.
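To make “semantic awareness” a little more concrete, here is a minimal Python sketch of a scan whose points carry labels. In practice those labels would come from a machine learning model. Here they are simply hard-coded, and the sample data and function names are hypothetical.

```python
from collections import defaultdict

# A made-up labeled scan: each entry is (x, y, z, label).
labeled_scan = [
    (0.0, 0.0, 0.0, "road"),
    (1.0, 0.0, 0.0, "road"),
    (1.0, 2.0, 3.0, "tree"),
    (1.2, 2.1, 3.5, "tree"),
    (5.0, 1.0, 2.0, "building"),
]

def segment_by_label(points):
    """Group the points of a labeled scan by their semantic label."""
    segments = defaultdict(list)
    for x, y, z, label in points:
        segments[label].append((x, y, z))
    return dict(segments)

def bounding_box(points):
    """Return the (min corner, max corner) of a group of 3D points."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

segments = segment_by_label(labeled_scan)
tree_box = bounding_box(segments["tree"])
```

With labels attached, a question like “where are the trees?” becomes a simple lookup rather than a guess about raw geometry.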

Even Apple gives you out-of-the-box abilities to detect the objects seen by your camera. And with ARKit, this is extended to “tag” objects like tables or to provide your own models (say, a sculpture in a museum) that can then be detected.

And so not only are we able to scan objects, but we’re increasingly able to ‘understand’ what we’re seeing.

What Does It All Mean?

There’s a pincer movement: city- and planetary-scale scanning (which will power everything from self-driving cars to drone deliveries) is becoming a bigger and bigger business. And smaller-scale scanning is becoming more and more accessible to individual developers.

Scans are being exported and used in game engines to create hyper-realistic immersion. Unreal 5 gives us a hint of just HOW immersive this will be:

Meanwhile, because of the scans that happen in the “background”, and with advances in rendering and AR creation software, we’re starting to see “blends” of the physical and digital that are looking more and more real. The real-time reflections in this scene hint at how ‘real’ AR is starting to look:

Meanwhile, delivering all of this to end users becomes another line of attack. I can scan Main Street, for example, but I still need to deliver that scan to a new visitor if I want to give them a fun/realistic and high-resolution experience.

5G will be critical to the ability to do this rapidly and effectively. It’s why Niantic has formed a Planet-Scale AR Alliance:

All of these efforts point to a new generation of spatially-aware computing. The lines are blurring:

- Digital objects can be created by scanning physical ones, or the physical world can be supplemented with things that don’t really exist

- The real world has a digital twin – one that is increasing in size and resolution because of how many companies are attempting to “map reality”

And this only scratches the surface of what it all means. Because these things are also interactive.

I can control the physical world within a digital twin that is accessed from the other side of the planet. I can click on physical objects and interact with their digital overlays. Objects can shuttle back-and-forth between physical and digital realities.

All of those scans I see, all of the little experiments being conducted – they ARE the breakthrough in AR that will power the next few years. They are also the foundation piece in preparing for a sea-change in computing, one where new devices (like glasses) and ambient computing will, eventually, make activities like visiting your Facebook page seem quaint.