Apple Launches An AR Cloud (Selectively)

Apple has launched an AR Cloud to allow precise location-based augmented reality experiences.

There’s a caveat: it’s only available in five US cities (Miami, Los Angeles, San Francisco, Chicago and New York).

Still, Apple has put a stake in the ground: it is now officially an “AR Cloud” company, joining the likes of Google, Niantic and Snapchat.

What Is an AR Cloud?

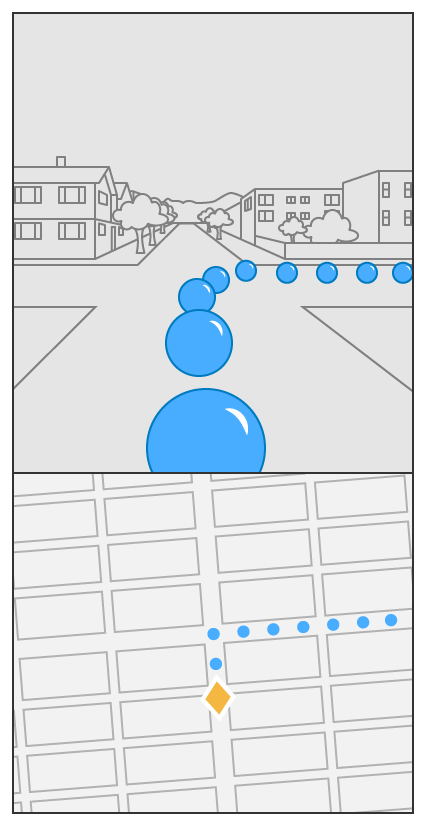

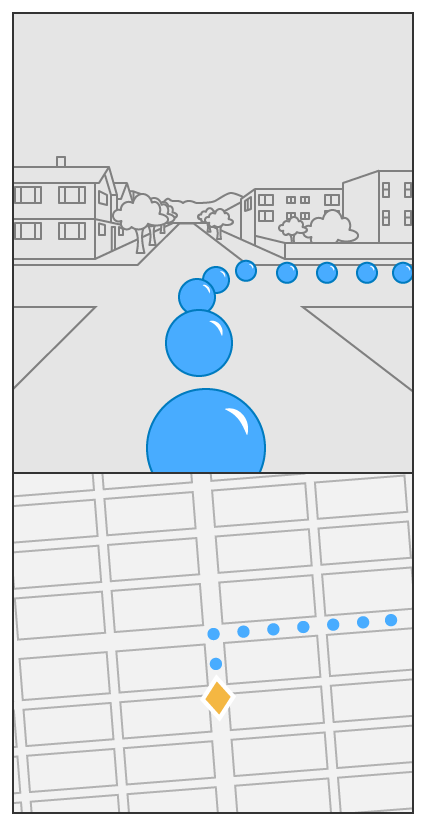

So first, a definition: in order for augmented reality to work really well, the device you use needs to be aware of the world around it.

Think of Pokémon Go: you pan your camera around a bit until it can ‘place’ a Pokémon. While you’re scanning, your phone is detecting planes and objects and figuring out the best place to put the digital character in the ‘real world’.

But now imagine you’re walking down the street: you want to put direction markers, or have a series of 3D characters who greet you as you walk. How can you scan an entire city block?

The AR Cloud is a database of scans. Those scans can be sent to your phone ahead of your walk through the neighbourhood, so your camera doesn’t need to do as much work to figure out where to place the AR content.

These scans aren’t just photos or maps. They’re highly detailed ‘point clouds’ derived from a variety of sources.

Pixel8, which does crowd-sourced spatial mapping, gives a good visual example of what an AR cloud “looks like”:

Using Apple’s AR Cloud

At WWDC, Apple launched the ability to track geographic locations in AR.

They even provided a nice tutorial showing how it’s done (emphasis added):

“Geo-tracking configuration combines GPS, the device’s compass, and world-tracking features to enable back-camera AR experiences to track specific geographic locations. By giving ARKit a latitude and longitude (and optionally, altitude), an app declares interest in a specific location on the map.

ARKit tracks this location in the form of a location anchor, which an app can refer to in an AR experience. ARKit provides the location anchor’s coordinates with respect to the scene, which allows an app to render virtual content at its real-world location or trigger other interactions. For example, when the user approaches a location anchor, an app reveals a virtual signpost that explains a historic event that occurred there. Or, an app might render a virtual anchor in a series of location anchors that connect, to form a street route.”
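To make the idea of an anchor’s coordinates “with respect to the scene” a little more concrete, here is a minimal Python sketch of the underlying geometry. It is not ARKit code and makes no claim about how Apple implements geo tracking: the function names (`local_offset_m`, `should_reveal`), the 50-metre reveal radius and the sample coordinates are all assumptions for illustration. It converts an anchor’s latitude and longitude into an east/north offset in metres from the user, then uses that offset to decide whether to reveal the signpost from the example above.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def local_offset_m(origin, target):
    """Approximate (east, north) offset in metres from origin to target.

    Both arguments are (latitude, longitude) pairs in degrees. This uses an
    equirectangular (flat-earth) approximation, which is reasonable over the
    few hundred metres a street-level AR session typically covers.
    """
    lat0, lon0 = map(math.radians, origin)
    lat1, lon1 = map(math.radians, target)
    # Longitude lines converge towards the poles, so scale by cos(latitude).
    east = (lon1 - lon0) * math.cos((lat0 + lat1) / 2) * EARTH_RADIUS_M
    north = (lat1 - lat0) * EARTH_RADIUS_M
    return east, north


def should_reveal(user, anchor, radius_m=50.0):
    """Return True when the user is within radius_m metres of the anchor.

    radius_m is a hypothetical threshold chosen for this sketch.
    """
    east, north = local_offset_m(user, anchor)
    return math.hypot(east, north) <= radius_m


if __name__ == "__main__":
    # Hypothetical coordinates, a few dozen metres apart.
    user = (37.7950, -122.3940)
    signpost = (37.7952, -122.3938)
    print(local_offset_m(user, signpost))
    print(should_reveal(user, signpost))
```

GPS alone is too noisy for this kind of calculation to place content precisely, which is why the localization step described next matters.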

The code works by downloading localization data (the spatial ‘maps’ of the location):

“To place location anchors with precision, geo tracking requires a better understanding of the user’s geographic location than is possible with GPS alone. Based on a particular GPS coordinate, ARKit downloads batches of imagery that depict the physical environment in that area and assist the session with determining the user’s precise geographic location.

This localization imagery captures the view mostly from public streets and routes accessible by car. As a result, geo tracking doesn’t support areas within the city that are gated or accessible only to pedestrians, as ARKit lacks localization imagery there.”

The Source of Apple’s “Cloud”

The catch to all of this is that geo tracking is only available in a limited number of locations.

The reason? Most likely the data is coming from Apple Look Around, which is based in part on their purchase of a company called C3 Technologies:

C3 Technologies creates incredibly high-quality and detailed 3D maps with virtually no input from humans. The 3D mapping is camera based and the technology picks up buildings, homes, and even smaller objects like trees. C3’s solution comes from declassified missile targeting methods.

Sean Gorman, of Pixel8, figures that capturing this data is expensive:

And so it perhaps makes sense for Apple to get value twice from mapping a city: first for Apple Maps and second for AR.

They specify the availability of this data for ARKit:

“Because localization imagery depicts specific regions of the world, geo tracking only supports areas from which Apple has collected this localization imagery in advance. The current cities and areas are:

- San Francisco Bay Area

- Los Angeles

- New York

- Chicago

- Miami”

With Apple Glass still a year or two (or three) away, they won’t rush to allow localized AR experiences outdoors everywhere. Instead, they’ll be able to see what developers do with it in these early iterations, for a few select cities.

Which leaves an opening for Niantic, for crowdsourced solutions like Pixel8, and of course for Google – which is still leaps and bounds ahead of Apple when it comes to mapping the physical world.