Snapchat Is The World's Leading Augmented Reality Company

For this week at least, Snapchat is the world’s leading augmented reality (AR) company. They’ve taken the top position because they’ve tackled the four pillars of AR.

The Snapchat Partner Summit was a rocket ship. It catapulted them from being an ‘app company’ to a full-blown AR platform.

And in so doing, they’ll help raise the bar for everyone else.

In a single sweep, they’ve managed to elevate the ‘AR Cloud’ to something we can all wrap our heads around. They helped explain that AR isn’t just IKEA couches and mixed reality Lego. And they’ve made clear that they have an army of “AR developers”, with over a million experiences developed and shared.

Now, this might all change in a week.

Apple will hold its developer conference and will put its own stakes in the ground for the future of augmented reality.

But as we watch Apple, it’s worth evaluating how well they do in tackling the four pillars of AR:

Pillar One: AR Glasses

The battle is for the space in front of your eyes. The winners (and there will be many) of the real estate on the bridge of your nose may win the future of computing.

Qualcomm estimates that within 5-10 years, glasses will be untethered and unpaired. Meaning, everything you can do on your phone you’ll be able to do through your glasses.

The end of mobile phones? Maybe not. But glasses definitely represent one of the most likely paths to changing the computing paradigm.

Snapchat Spectacles

I haven’t given much thought to Snapchat Spectacles. They’re cameras that you strap to your head.

And yet Snapchat has at least released glasses – and the kind you might actually want to wear.

As Fast Company reported last year, the point about Spectacles might not be how many are sold, but that Snapchat is learning how to build hardware:

“Right now, companies like Apple and Facebook are holding off on releasing AR glasses because of hardware limitations. Snap made the decision to begin creating head-mounted computers early, albeit with a very limited set of features. So far, the main thing they’ve learned is that people don’t want to wear cameras on their faces. But sales numbers aren’t everything.

“Snap is learning by shipping, and that is a key strategy for them as they build out their platform, and build it out specifically around AR,” wrote Creative Strategies analyst Ben Bajarin in a (paywalled) blog post yesterday.

“While Snap may ultimately not be in the hardware business long term, it is important they continue to build the third-party developer part of the Snap platform and prepare those developers and Snap’s developer tools for the future of head-mounted computers,” Bajarin added.

And maybe the much-vaunted Glasshole effect doesn’t apply to the Snapchat generation. Sure, not many people really want to wear cameras next to their temples. But Snapchat is learning how to fuse fashion with hardware for a generation that isn’t necessarily the sweet spot for companies like Apple.

Snapchat might not be in the ‘AR glasses’ business yet. But they sure are learning a lot: they have the capacity to manufacture and sell hardware, they’re learning from their users, and they’re coming to understand the fusion of fashion and function.

Apple has its own designs for glasses, of course. But the battle won’t JUST be between Apple and Oakley. It will also be between Apple and Snapchat, Facebook and all the others.

Pillar Two: The AR Cloud

It seems like such an ephemeral thing. You scan an environment and somehow save that scan. Once you do, you can create persistent, multi-user AR experiences.

But in a single presentation, Snapchat made the AR Cloud “real”.

They went from a bunch of “point clouds” to explaining the types of experience this creates:

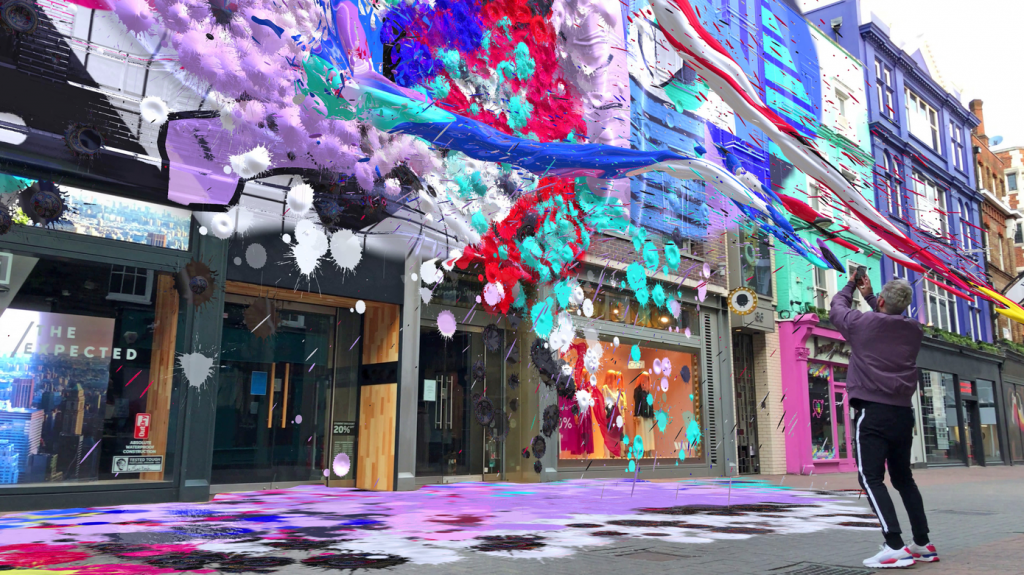

Snapchat Local Lenses is a bit of branding genius. It builds on the Lenses concept and helps to tie it back to digital twins that have been created from the photographs taken by millions of users.

Snapchat just did Niantic one better. While the creators of Pokemon Go are clearly developing their own AR Cloud (with support from their recent purchase of 6d.ai), they haven’t yet released anything for developers.

Pillar Three: Developer Tools

When you think of AR development, you think of ARKit or ARCore, with Unity and maybe Unreal thrown in for good measure.

But Snapchat already has an AR developer platform with Lens Studio. And they aren’t shy about calling it an AR platform.

If you think Lens Studio is for creating fun selfies, it’s time to think again. Instead, it’s more like a super user-friendly version of Unity – something that’s accessible to kids, brands and developers alike.

Snap Kit Will Power Apps Other Than Snapchat

I think the reason I’ve overlooked their toolset for AR is that it has been closed off and doesn’t provide an easy path to monetization. Sure, you can create Snapchat Lenses, but you can’t charge money for them and they exist within Snapchat alone.

But at the Partner Summit, Snapchat revealed its larger ambitions: to provide the AR tools for experiences outside of its own app.

With Camera Kit, Snap Kit is now extending beyond the walls of the Snapchat app and plans to be the AR toolset for others.

As Protocol reports:

“What Snap is attempting to do with Camera Kit is similar to Google putting a search bar into your browser, Amazon integrating Alexa into your sound system, or YouTube using embed codes to become the internet’s default video player.

The company said it assumes users will come to Snapchat to talk with their friends, but sees many other uses for cameras and AR that will happen outside Snapchat’s walls. Snap wants to power all of it. It wants to be more than an app; it wants to embed itself in the infrastructure of the internet.”

There will be a battle to provide the ‘plumbing’ for the coming wave of AR glasses: to provide the AR Cloud infrastructure and scans and to provide the tools to help create AR experiences.

Snapchat has now made clear that it has designs on both.

Pillar Four: Machine Learning

AR experiences will really start to sing when our devices are more aware of the world around them. When we can semantically map a space, we can start to create experiences where, for example, an AR character knows what a ‘chair’ is.

Unity has taken steps in this direction with MARS: allowing developers to create rules for how digital objects interact with a physical scene.
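To make that concrete, here is a minimal sketch in Python of what a rule over a semantically mapped scene might look like. It is not the MARS API or anything Snapchat has published. The `DetectedObject` and `PlacementRule` names, the sizes and the sample scene are all made up for illustration, and a real system would get its labels from a machine learning model rather than a hand-written list.

```python
from dataclasses import dataclass


# Hypothetical data model for illustration only; these names do not come
# from Unity MARS, Lens Studio, or any other real toolkit.
@dataclass
class DetectedObject:
    label: str        # semantic label assigned to the object, e.g. "chair"
    width_m: float    # usable surface width in meters
    depth_m: float    # usable surface depth in meters
    height_m: float   # height of the surface above the floor in meters


@dataclass
class PlacementRule:
    label: str          # the kind of object the AR content needs
    min_width_m: float  # smallest surface the content will fit on
    min_depth_m: float

    def matches(self, obj: DetectedObject) -> bool:
        # A rule matches when the label agrees and the surface is big enough.
        return (
            obj.label == self.label
            and obj.width_m >= self.min_width_m
            and obj.depth_m >= self.min_depth_m
        )


def find_placements(rule: PlacementRule, scene: list) -> list:
    """Return every object in the scene that satisfies the rule."""
    return [obj for obj in scene if rule.matches(obj)]


# A made-up scene, standing in for the output of a semantic mapping step.
scene = [
    DetectedObject("chair", 0.45, 0.45, 0.50),
    DetectedObject("table", 1.20, 0.80, 0.75),
    DetectedObject("chair", 0.30, 0.30, 0.40),  # too small for the rule below
]

# "Seat my AR character on a chair at least 40 cm wide and deep."
sit_rule = PlacementRule("chair", 0.40, 0.40)
seats = find_placements(sit_rule, scene)
print(f"{len(seats)} place(s) where the character can sit")
```

The point of the sketch is the division of labor: the device works out what is in the room, and the developer only writes down what the experience needs.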

This week’s CVPR conference is an academic deep dive into these and other topics. At least some of the research comes with cool names like SuperGlue!

Meanwhile, Snapchat is letting you bring your own machine learning to its platform. They introduced a few samples ported in from other developers, such as the ability to recognize dog breeds or plant types. But their machine learning ambitions are wider:

“Snap wants developers to bring their own neural net models to their platform to enable a more creative and machine learning-intensive class of Lenses. SnapML allows users to bring in trained models and let users augment their environment, creating visual filters that transform scenes in more sophisticated ways.

The data sets that creators upload to Lens Studio will allow their Lenses to see with a new set of eyes and search out new objects. Snap is partnering with AR startup Wannaby to give developers access to their foot-tracking tech to enable lenses that allow users to try on sneakers virtually. Another partnership with Prisma allows the Lens camera to filter the world in the style of familiar artistic styles.”

Platforms Forward

Snapchat will roll out some of its new features cautiously. Local Lenses, for example, will be made available slowly, likely so that they can test their ‘AR Cloud’ in areas with dense point clouds.

Camera Kit, which will power apps outside of Snapchat, also seems like it will have a stepwise rollout.

But what all of these announcements add up to is that Snapchat should no longer be thought of as an ‘app company’.

It has put a stake in the ground declaring itself a major player in the future of computing.

As this week’s leading augmented reality company, it is tackling the four major pillars of AR and announcing to the world that it will be a vital part of our mixed reality future.