Unreal Support for Azure Spatial Anchors

Unreal recently announced support for Azure Spatial Anchors with the release of version 4.25.

At first glance, the announcement might seem most closely tied to Unreal’s expanded support for Microsoft’s HoloLens. But Azure Spatial Anchors have wider implications: they are helping to make the AR Cloud a reality.

Spatial Anchors are a method of positioning virtual and physical objects on a ‘digital twin’ of physical space. In a store, for example, anchors can be placed on shelves or along the floor to enable highly precise way-finding.

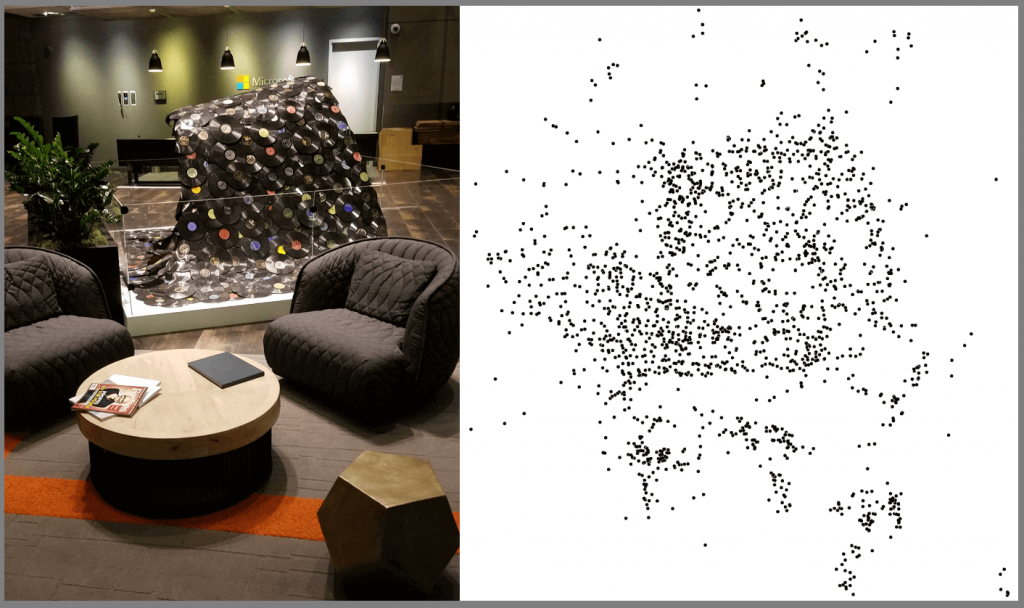

Anchors are created by capturing a point cloud of a 3D space. That capture lets the device ‘viewing’ the physical space (a HoloLens or an iPhone, for example) work out precisely where it is located, so that it can then ‘attach’ 3D objects to known points in that space.
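To make the idea of ‘attaching’ an object more concrete: once a device knows where an anchor sits, content can be stored as an offset relative to that anchor and converted into world coordinates on demand. The Python below is a minimal sketch of that transform, not the Azure Spatial Anchors or Unreal API. It assumes a simplified anchor pose made of a position plus a single rotation (yaw) about the vertical axis, whereas real anchors carry a full 3D orientation. `AnchorPose` and `anchor_to_world` are hypothetical names.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class AnchorPose:
    """Hypothetical, simplified anchor pose: a position plus a yaw about the vertical (y) axis."""
    x: float
    y: float
    z: float
    yaw_radians: float


def anchor_to_world(anchor: AnchorPose, offset: Vec3) -> Vec3:
    """Convert a point expressed relative to the anchor into world coordinates."""
    ox, oy, oz = offset
    c = math.cos(anchor.yaw_radians)
    s = math.sin(anchor.yaw_radians)
    # Rotate the offset about the vertical (y) axis by the anchor's yaw...
    wx = c * ox + s * oz
    wz = -s * ox + c * oz
    # ...then translate it by the anchor's position.
    return (anchor.x + wx, anchor.y + oy, anchor.z + wz)


# Illustrative values: a shelf anchor, and a soup can stored 1 m along the
# shelf and 1.2 m above the anchor point.
shelf = AnchorPose(x=4.0, y=0.0, z=-2.0, yaw_radians=math.pi / 2)
soup_can = anchor_to_world(shelf, (1.0, 1.2, 0.0))
print(soup_can)
```

Because the soup can is stored relative to the shelf anchor rather than in absolute coordinates, any device that locates the same anchor can place the can at the same physical spot.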

Providing Augmented Reality Precision

Ever used an augmented reality app where the object seems to float or move unexpectedly? It’s likely using a rough scan of the environment from your phone’s camera to try to figure out where the planes or surfaces are. Your camera can only process so much data in a short time, and it uses object detection to try to figure out what’s a floor and what’s a table.

More capable sensors (for example, the LiDAR scanner on the new iPad Pro) and improvements in computer-vision AI are leading to more precision, as seen in Apple’s recent improvements to ARKit.

However, these scanners have limited range. The LiDAR scanner on the iPad works up to about 5 metres: fine for an IKEA app in your living room, but hardly enough to map a grocery store aisle.

Data In The Cloud

Typical AR experiences rely on the user to map the world around them. Think of Pokémon GO: you hold up your phone, the camera activates, and you circle around until a Pokémon appears nearby.

Your camera has mapped the world, detected where the ground is, and placed a Pokémon. It’s hardly a realistic experience, but in this case the game mechanics matter more.

With spatial anchors, the space has usually been mapped before you arrive. Or, if you are the one mapping it, that map can be stored and reused.

This allows you to enter a grocery store, say, and get access to the spatial anchors for that location. You don’t need to do the scan yourself; it has already been done for you. You’re able to “see” at greater distances, so the app could show you an overlay that guides you to a specific can of soup.

Unreal and Azure Spatial Anchors

Unreal announced support for HoloLens 2 and Azure Spatial Anchors:

Another feature to reach production-readiness with this release is support for HoloLens 2. As well as performance improvements, there’s new support for mixed reality capture from a third-person camera view, the new ability to enable HoloLens remoting from packaged Unreal Engine applications via a command line, and initial support for Azure Spatial Anchors.

These were launched alongside built-in support for the use of LiDAR data (previously available as a plug-in):

For anyone wanting to work with LiDAR data, there’s now built-in support in Unreal Engine for importing, visualizing, editing, and interacting with point clouds. These real-world captures are great for visualizing locations, or for putting newly designed elements in context. The initial version of the plugin was previously made available on MarketPlace; in addition to being included in Unreal Engine 4.25, the latest plugin also offers new support for working with multiple point cloud segments while still maintaining frame performance, an option to delete hidden points, and various other small enhancements.

Unreal Makes It More Real

The launch of support for Azure Spatial Anchors (ASA) shouldn’t be read as simply meaning “HoloLens”. ASA is platform-agnostic, working across HoloLens, iOS and Android devices; it just turns out that HoloLens is a clear beneficiary of the technology.

You can imagine Azure Spatial Anchors being used within Unreal Engine to create an iPad application: a richly detailed set of holographic overlays on a pre-mapped physical space. (Hey, why don’t you get started?)

Unreal lets us see incredibly detailed models on AR devices. Using holographic streaming, Microsoft showed off a model with “a staggering 15 million polygons in a physically-based rendering environment with fully dynamic lighting and shadows, multi-layered materials, and volumetric effects.”

Now these detailed models can also be ‘anchored’ in physical space, appearing with precise fidelity in both how they look and where they sit. And because the anchors are stored in the Azure cloud, they can persist beyond a single session.

Reality keeps getting more, well, unreal.