Matterport: Visualizing the Augmented Reality Cloud

Matterport lets you scan a space in 3D, annotate it and share it with friends. The company recently launched support for iPhones, so you can download the app and give it a spin. If nothing else, it’s a chance to visualize part of the augmented reality cloud, and if you’re a professional (a real estate agent, say) you might even put it to work.

Previously, the company focused on third-party capture devices such as the Insta360, Ricoh Theta, and Leica’s LIDAR-based BLK. But these aren’t exactly common among consumers. By extending its technology to the iPhone, Matterport is now bringing 3D capture to a much wider audience.

Visualizing the Augmented Reality Cloud

The AR Cloud has several components. Think of it as a digital mirror world: a precise three-dimensional map of physical reality. The ‘cloud’ part means that this mirror world is accessible and pieces of it can be downloaded on demand.

Augmented reality lets us place digital artefacts on physical spaces and objects. It lets you add digital makeup to a video or photo of your face, or place a digital IKEA couch in a video or photo of your living room.

To do that, AR relies on ‘scans’ of your face or your living room, which it uses to anchor the digital content properly.

The AR Cloud makes these scans shareable and accessible. If I walk into a mall, the AR Cloud would let my device download an up-to-date, AR-ready map of the space.

Two Foundational Components of the AR Cloud

There are two foundational components to the AR Cloud:

- Reality modelling and mapping

- Spatial positioning

Matterport demonstrates the first of these components. Using your phone, you point your camera at the world around you: your office, say, or a condo you’re selling.

The app stitches together pictures of that space. But these aren’t just photos: the capture also draws on other sensors in your phone to help map the geometry of what the camera sees.

Matterport has built its own AI engine for this, which it calls Cortex. In the company’s words:

The AI engine that powers these breakthroughs is called Cortex, our deep learning neural network that is so advanced that it can accurately predict 3D building geometry and produce a high fidelity digital twin from a standard smartphone camera found in iPhones as far back as the iPhone 6S. Cortex is trained using our tried and true spatial data library for the built world, comprising more than 7 billion square feet of spatial data from 80 countries including almost every type of building imaginable. Credit the Matterport Pro1 and Pro2 cameras for capturing this uncompromising ground truth in spatial data that makes today’s milestone a reality.

The result is a precise 3D map of a physical space, one in which the geometry of individual objects is known.

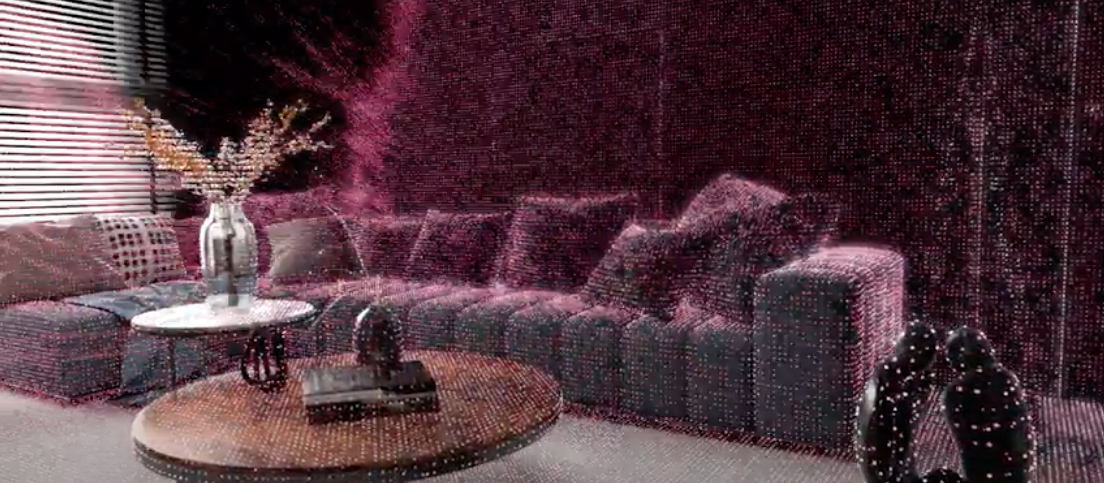

This matters because, unlike with a simple panorama photo, you can annotate those individual objects, and the annotations stay properly anchored from whichever angle you view the space.

What Matterport demonstrates, then, is how a high-resolution scan of a space allows individual objects to be annotated. As the AR Cloud develops, these annotations (added manually or generated by AI) will build up a computer-aware model of physical space, enriched by the semantic relationships between those objects.
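Why does object-level geometry keep annotations anchored? A rough way to see it: once the scan gives an annotation a 3D position, that position is stored once and simply re-projected for every viewpoint, whereas a note on a panorama is tied to pixels in a single image. The Python sketch below illustrates the idea with a basic pinhole camera model. It is not Matterport’s implementation; the `Annotation` and `CameraPose` types, the `project` function, the yaw-only camera and the intrinsics (a 500-pixel focal length and an image centre of (320, 240)) are hypothetical choices made for illustration.

```python
# Illustrative sketch: hypothetical types and a simplified pinhole camera,
# not Matterport's code.
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Annotation:
    """A note pinned to a 3D point in the scan's own coordinate frame."""
    label: str
    x: float
    y: float  # up
    z: float


@dataclass
class CameraPose:
    """Where the viewer stands in the same frame, plus heading about the up axis."""
    x: float
    y: float
    z: float
    yaw: float  # radians; 0 means looking along +z


def project(a: Annotation, cam: CameraPose, focal_px: float = 500.0,
            cx: float = 320.0, cy: float = 240.0) -> Optional[Tuple[float, float]]:
    """Project the anchor into pixel coordinates for this viewpoint.

    Returns None when the anchor is behind the camera."""
    # Offset of the anchor from the camera, still in scan coordinates.
    dx, dy, dz = a.x - cam.x, a.y - cam.y, a.z - cam.z
    # Rotate by -yaw so the camera's viewing direction becomes +z.
    # (Yaw-only camera, so the vertical offset dy is unchanged.)
    c, s = math.cos(-cam.yaw), math.sin(-cam.yaw)
    xc = c * dx + s * dz
    zc = -s * dx + c * dz
    if zc <= 0:
        return None
    # Pinhole projection; image v grows downward, so "up" is subtracted.
    return (cx + focal_px * xc / zc, cy - focal_px * dy / zc)


# One annotation, stored once, viewed from two different spots in the room.
fireplace = Annotation("Fireplace", 1.0, 0.5, 4.0)
view_a = CameraPose(0.0, 0.0, 0.0, yaw=0.0)
view_b = CameraPose(3.0, 0.0, 1.0, yaw=math.atan2(-2.0, 3.0))  # turned to face it
print(project(fireplace, view_a))  # (445.0, 177.5)
print(project(fireplace, view_b))  # roughly centred horizontally (u close to 320)
```

The same stored point lands at different pixels from the two viewpoints, but always on the fireplace. A real viewer would also need full 3D rotation, lens distortion and occlusion handling.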

Modelling + Positioning = Immersion

What Matterport is missing is a way to position the ‘viewer’ within that space. Sure, the 3D walkthroughs let you stand at a specific spot in the virtual model, but what augmented reality promises is the ability to enter the actual room, know exactly where you’re standing, and see those same annotations on your phone or through AR glasses.
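Positioning is the harder half. One classic ingredient, sketched below in Python under heavy simplifying assumptions, is aligning what the device currently sees with the stored map: given a few landmarks matched between the device’s local frame and the scan (treated here as a flat 2D floor plan), a least-squares rigid alignment (2D Kabsch/Procrustes) recovers the device’s rotation and position in the map. Any annotation stored in the scan can then be expressed in the device’s own frame. The function names and landmark coordinates are hypothetical, and this is not a description of how Matterport or any particular AR platform localizes a device.

```python
# Illustrative sketch: 2D rigid alignment from already-matched landmarks.
# Hypothetical names and data; real systems work in 3D and must find matches.
import math
from typing import List, Tuple

Point = Tuple[float, float]        # (x, y) on a flat floor plan
Pose = Tuple[float, float, float]  # (theta, tx, ty): device frame -> map frame


def estimate_pose(device_pts: List[Point], map_pts: List[Point]) -> Pose:
    """Least-squares 2D rigid alignment (2D Kabsch/Procrustes).

    device_pts[i] and map_pts[i] are the same landmark, seen in the device's
    local frame and in the stored scan. Returns (theta, tx, ty) such that
    map_point ~= R(theta) * device_point + (tx, ty)."""
    n = len(device_pts)
    if n != len(map_pts) or n < 2:
        raise ValueError("need at least two matched landmark pairs")
    # Centroids of both point sets.
    pcx = sum(x for x, _ in device_pts) / n
    pcy = sum(y for _, y in device_pts) / n
    qcx = sum(x for x, _ in map_pts) / n
    qcy = sum(y for _, y in map_pts) / n
    # Accumulate dot and cross terms of the centred pairs; the optimal
    # rotation angle is atan2(sum of crosses, sum of dots).
    dot_sum = cross_sum = 0.0
    for (px, py), (qx, qy) in zip(device_pts, map_pts):
        px, py, qx, qy = px - pcx, py - pcy, qx - qcx, qy - qcy
        dot_sum += px * qx + py * qy
        cross_sum += px * qy - py * qx
    theta = math.atan2(cross_sum, dot_sum)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated device centroid onto the map centroid.
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty


def map_to_device(pt: Point, pose: Pose) -> Point:
    """Express a map-frame annotation in the device's local frame (inverse pose)."""
    theta, tx, ty = pose
    c, s = math.cos(theta), math.sin(theta)
    dx, dy = pt[0] - tx, pt[1] - ty
    return (c * dx + s * dy, -s * dx + c * dy)  # R(theta) transposed, applied to (dx, dy)


# Hypothetical landmarks (e.g. door and window corners) matched between
# what the phone sees right now and the stored scan of the condo.
device_landmarks = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
scan_landmarks = [(2.0, 1.0), (2.0, 2.0), (0.0, 1.0)]

pose = estimate_pose(device_landmarks, scan_landmarks)
print(pose)  # about (pi/2, 2.0, 1.0): device turned 90 degrees, offset by (2, 1)

# An annotation placed in the scan can now be drawn in the device's frame.
note_in_scan = (2.0, 3.0)
print(map_to_device(note_in_scan, pose))  # about (2.0, 0.0)
```

With three noise-free matches the fit is exact; in practice a device would need many more landmarks, plus outlier rejection (RANSAC, for example), to cope with noisy or mismatched observations.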

Matterport is halfway there. They have a cloud of shareable 3D scans, and users are annotating those scans in creative ways (for a real estate listing, say, or as a 3D shop floor).

If they next add the ability to walk through the actual physical spaces and see those same annotations, they’ll have built a robust version of an AR Cloud.

Think of a real estate agent who scans a condo, puts it online for a virtual walkthrough, and then lets buyers see those same annotations when they visit the actual home wearing AR glasses.

These “mini clouds” help us to visualize what it will be like as larger and larger pieces of the physical world are scanned, annotated and made accessible. The mirror world could eventually become as large as reality itself.