What Is The AR Cloud

The AR Cloud will unleash new capabilities by creating a 1:1 map of the physical world. It promises to help turn the physical world into a digital interface and operating system.

It’s perhaps the last frontier between here and ubiquitous computing: information systems present in everything around us, a giant mesh of sensors and connectivity in which the world itself becomes the ultimate endpoint on a world wide web.

These Are The Early Days of Augmented Reality

We’re in the early days of augmented reality (AR).

Many of us have seen digital content blended with the ‘real world’. It’s not uncommon.

If you’ve ever seen a photo taken in Snapchat with puppy dog ears and nose added to the face, you’ve seen AR. If you’ve ever seen how a couch will look in your living room by “placing it” in an app, you’ve seen AR.

These examples take a real-world input (the camera), interpret what is seen (detecting your face) and then add a digital “layer” (puppy dog nose and ears).

But these experiences often lack precision. That IKEA couch can seem to float slightly above the floor, depending on what kind of phone you’re using to view your living room, how good its camera is, and what operating system it runs.

They also usually lack persistence. The couch you’ve placed doesn’t “stick” between sessions, and if someone else comes into the same room, they won’t ‘see’ where you left it.

And the mediating device (your phone) still sits between you and your view of the augmented physical space.

Glasses And Mediation

The dreamed-of power of AR will only really become, well, “real”, when the mediating device is less intrusive.

Holding up your phone to play augmented reality Lego or to hold a Pokémon battle means your hand is otherwise occupied. There’s still a phone between you and the reality that has been augmented.

But if you could see that same scene through AR glasses, your hands would then be free to engage with the scene, and the experience wouldn’t seem so mediated.

And while there are augmented reality glasses today, there’s still distance to travel before you make the mistake of trying to sit on that virtual couch. There are still years of work and hardware limitations that need to be overcome before digital objects look fully ‘real’.

How Your Phone Sees

Until recently, most consumer AR experiences used your phone’s camera to try to detect features of the world around you, and then place digital content on top of those features.

The most robust example is facial mapping. When you go to take a selfie, machine learning (ML) is able to rapidly detect your nose, eyes, lips and other features. In part this is because the ML models have had lots of practice on faces, and it’s a relatively simple “scene” to scan.

These models can quickly determine facial ‘anchor points’, which allow an app to add those puppy dog noses or distort you into a cartoon character.

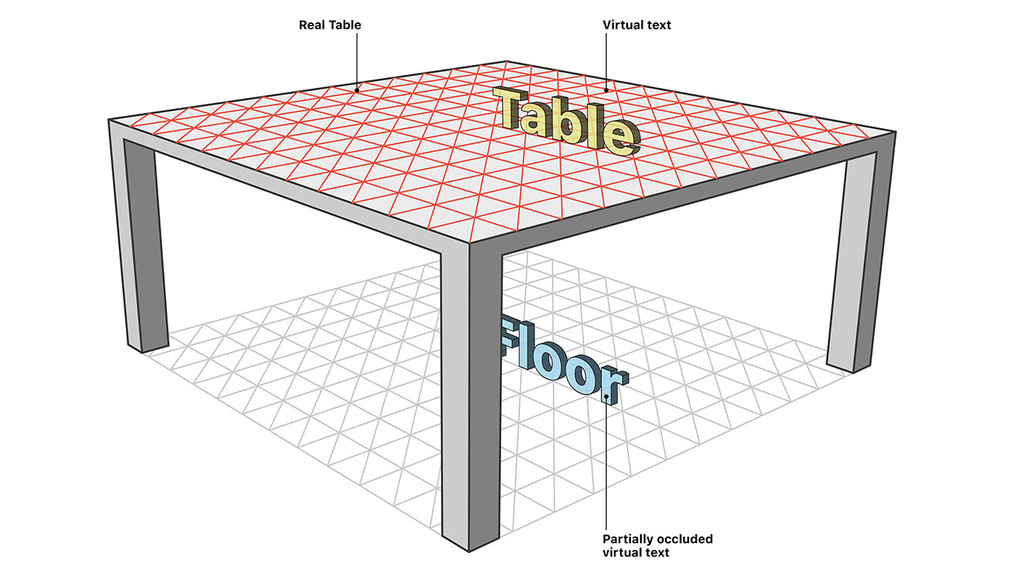

Doing the same thing in your living room is harder. There are a lot of objects in the space. But even if there’s a floor and a table, it isn’t always easy to determine that it’s a table, or to map its edges and planes.

Say you want to place a virtual dinosaur somewhere. Usually the best your phone can do is detect planes: it finds a suitable flat surface and places the dinosaur on that surface.
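To make plane detection a little more concrete, here is a minimal Python sketch of RANSAC, one classic way to find a dominant flat surface in a cloud of 3D points. It assumes the phone has already produced a list of (x, y, z) points with y pointing up. The function names, the 1 cm threshold and the iteration count are illustrative choices. This is not a description of how ARKit or ARCore actually implement plane detection.

```python
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # unit normal (nx, ny, nz) and offset d


def plane_from_points(p1: Point, p2: Point, p3: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    ux, uy, uz = p2[0] - p1[0], p2[1] - p1[1], p2[2] - p1[2]
    vx, vy, vz = p3[0] - p1[0], p3[1] - p1[1], p3[2] - p1[2]
    # The cross product of two in-plane vectors is the plane's normal.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    if length < 1e-9:
        return None
    nx, ny, nz = nx / length, ny / length, nz / length
    d = -(nx * p1[0] + ny * p1[1] + nz * p1[2])
    return (nx, ny, nz, d)


def detect_plane(points: Sequence[Point], iterations: int = 200,
                 threshold: float = 0.01, seed: int = 0):
    """RANSAC: keep the candidate plane supported by the most points."""
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        # Fit a candidate plane through three randomly chosen points.
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        nx, ny, nz, d = plane
        # Points within `threshold` meters of the plane count as support.
        inliers = [p for p in points
                   if abs(nx * p[0] + ny * p[1] + nz * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


if __name__ == "__main__":
    # Synthetic scene: a 10 x 10 grid of floor points at height 0, plus clutter above it.
    floor = [(i * 0.2, 0.0, j * 0.2) for i in range(10) for j in range(10)]
    data_rng = random.Random(1)
    clutter = [(data_rng.uniform(0, 2), data_rng.uniform(0.3, 1.0),
                data_rng.uniform(0, 2)) for _ in range(20)]
    plane, inliers = detect_plane(floor + clutter)
    # One place to put the dinosaur: the centroid of the floor's inlier points.
    cx = sum(p[0] for p in inliers) / len(inliers)
    cz = sum(p[2] for p in inliers) / len(inliers)
    print("plane:", plane, "inliers:", len(inliers), "place at:", (cx, 0.0, cz))
```

The random sampling is what makes RANSAC robust to clutter. A plane fitted through three floor points is supported by the whole floor. Planes through clutter points gather far less support, so they lose.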

These capabilities start to improve if the camera is supplemented or replaced by a proper 3D ‘scanner’.

Apple recently introduced a LiDAR scanner on the iPad Pro. The scanner allows the iPad to do more than just detect surfaces and faces: it can create an actual 3D “map” of a space.
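A depth sensor such as LiDAR reports, for each pixel, how far away the surface is. Under the standard pinhole camera model, each depth reading can be turned into a 3D point, and those points are the raw material for a 3D map.

The minimal Python sketch below makes three assumptions:

- The depth image is a list of rows, in meters, measured along the camera's viewing axis.
- The camera intrinsics (fx, fy, cx, cy) are hypothetical values.
- The camera axes follow a common x-right, y-down, z-forward convention.

Real pipelines, Apple's included, also fuse many frames into meshes. This sketch does not attempt that.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: Sequence[Sequence[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image into 3D points with a pinhole camera model.

    depth[v][u] is the distance along the viewing axis (meters) for pixel
    column u and row v; a value of 0 or less means "no reading".
    Camera axes: x to the right, y down, z forward.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels where the sensor got no return
            # Invert the projection u = fx * x / z + cx (and likewise for v).
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # A tiny 2 x 2 depth image with one missing reading.
    print(depth_to_points([[1.0, 0.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0))
    # → [(0.0, 0.0, 1.0), (0.0, 2.0, 2.0), (2.0, 2.0, 2.0)]
```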

It includes the ability to “classify floors, tables, seats, windows, and ceilings” and then to add some realistic physics to the scene. For example, a virtual ball falling from the ceiling can bounce across the surface of the physical table.

This is just one example of how precisely you can map the physical world around you depending on how well you can scan it. Microsoft HoloLens and Magic Leap headsets employ similar sensors but are not yet widely used by consumers.

Reality Modeling and Mapping: Sharing Scans in the AR Cloud

This brings us to our first example of the AR Cloud. It’s fine that your iPad can scan your living room, but what happens when you visit a friend’s house? You’ll need to scan their living room too.

What if I could share my scan with you? What if, when I arrive at your place, my phone could download a scan in the background so that we could quickly get a multiplayer AR game up and running, with each of us ‘seeing’ the same thing through our own devices?

The first concept of the AR Cloud is a shared repository of these scans. It would let us rapidly access accurate three-dimensional models of physical space, along with a semantic mapping of the objects in that space.

This makes even more sense when you move out of the living room and into a mall, a factory floor or an airport. No one is going to scan their local grocery store in order to get precise wayfinding to the soup section. Instead, they want to somehow download a copy of a scan made by someone else.

But a LiDAR scan (as an example) is really just a rough approximation of the geometry of reality.

Apple might promise that they can detect floors and ceilings, but you’d need to add a bunch of machine learning in order to also detect plants, an empty pizza box, or a dog on the living room floor.

“Scans” become useful when they’re shared. They become TRULY useful when the objects in that ‘scan’ are identified and named, their geometric relationships are determined, and the semantic relationships between them are mapped.

They become mind-blowing when they’re updated in real time with the semantic relationship between objects fully mapped.
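Here is one way ‘semantic relationships’ might look as data: a minimal Python sketch of a tiny scene graph. Each object has a label and an axis-aligned bounding box, and a simple rule infers “A is on B” from the geometry.

The class, the function names and the 2 cm tolerance are all hypothetical. A real AR Cloud would need far richer object recognition and many more relationship types.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in meters, y pointing up


@dataclass
class SceneObject:
    label: str
    min_corner: Vec3  # lowest x, y, z of the object's bounding box
    max_corner: Vec3  # highest x, y, z of the object's bounding box


def rests_on(a: SceneObject, b: SceneObject, tolerance: float = 0.02) -> bool:
    """True if a's bottom sits at b's top and their footprints overlap."""
    touching = abs(a.min_corner[1] - b.max_corner[1]) <= tolerance
    overlap_x = a.min_corner[0] < b.max_corner[0] and b.min_corner[0] < a.max_corner[0]
    overlap_z = a.min_corner[2] < b.max_corner[2] and b.min_corner[2] < a.max_corner[2]
    return touching and overlap_x and overlap_z


def build_relations(objects: List[SceneObject]) -> List[Tuple[str, str, str]]:
    """Infer (subject, "on", object) triples for every pair of objects."""
    relations = []
    for a in objects:
        for b in objects:
            if a is not b and rests_on(a, b):
                relations.append((a.label, "on", b.label))
    return relations


if __name__ == "__main__":
    scene = [
        SceneObject("floor", (-5.0, -0.01, -5.0), (5.0, 0.0, 5.0)),
        SceneObject("table", (0.0, 0.0, 0.0), (1.2, 0.75, 0.8)),
        SceneObject("pizza box", (0.2, 0.75, 0.2), (0.6, 0.8, 0.6)),
        SceneObject("dog", (2.0, 0.0, 2.0), (2.8, 0.5, 2.3)),
    ]
    for triple in build_relations(scene):
        print(triple)
```

Re-running `build_relations` whenever the scan changes is one simple way to keep relationships like these current as the space updates.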

Geo Pose and Spatial Registry

One of the challenges in using these scans is figuring out how to position the user within that scan.

If I’m using my iPad and its LiDAR scanner to play an AR game in my living room, the app already knows where I am. But if I download a scan, my device needs to work out where I am within that model.

Consider GPS: on its own it has usually been accurate only to within 10-15 feet, and it needs additional technology to be more precise.

Similarly, I need some additional data in order to figure out whether I’m standing next to the soup or the pasta in the grocery store. Ideally, I know not only where I am on the ‘floor’ but also precisely at what height, and which direction I’m facing.

Otherwise your digital objects will end up floating around in all the wrong places.

The second concept of the AR Cloud is this geographic positioning: creating a ‘spatial registry’ so that I can quickly find out where I am in a physical space. Ideally, that position covers six degrees of freedom: three for location and three for orientation.

Say my device can tell that I’m close to a particular kiosk in a mall. By querying the AR Cloud, I can find out where that kiosk is in relation to the spatial model, and therefore where I am.
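Here is a minimal Python sketch of that kiosk lookup, assuming a hypothetical spatial registry that maps anchor IDs to poses in the mall’s map. To keep it short, the sketch makes two simplifications:

- A pose is position plus heading (yaw), rather than a full six-degree-of-freedom orientation.
- x and y lie on the floor plane, and z is height.

The device measures its own pose relative to the recognized kiosk. Composing that relative pose with the kiosk’s registered pose gives the device’s pose in the map.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    x: float    # meters, on the floor plane
    y: float    # meters, on the floor plane
    z: float    # meters, height
    yaw: float  # radians, counterclockwise about the vertical axis


def compose(parent: Pose, child: Pose) -> Pose:
    """Express `child` (given in parent's frame) in the frame parent lives in."""
    c, s = math.cos(parent.yaw), math.sin(parent.yaw)
    return Pose(
        x=parent.x + c * child.x - s * child.y,  # rotate the offset, then translate
        y=parent.y + s * child.x + c * child.y,
        z=parent.z + child.z,
        yaw=(parent.yaw + child.yaw) % (2 * math.pi),
    )


# Hypothetical spatial registry: anchor ID -> anchor pose in the mall's map frame.
SPATIAL_REGISTRY = {
    "kiosk-17": Pose(x=40.0, y=12.0, z=0.0, yaw=math.pi / 2),
}


def localize(anchor_id: str, device_relative_to_anchor: Pose) -> Pose:
    """Device pose in the map, from a recognized anchor and the device's pose relative to it."""
    anchor = SPATIAL_REGISTRY[anchor_id]
    return compose(anchor, device_relative_to_anchor)


if __name__ == "__main__":
    # The device is 2 m out along the kiosk's local x axis, 1.5 m up, facing back at it.
    print(localize("kiosk-17", Pose(x=2.0, y=0.0, z=1.5, yaw=math.pi)))
```

A full implementation would use a rotation matrix or quaternion so that pitch and roll are handled too. The idea stays the same: the registry supplies the anchor’s pose, and the device supplies its pose relative to the anchor.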

In addition, I’ll need to tap into something other than GPS to make sense of where I am indoors. Bluetooth LE (beacons), WiFi, 5G, ultra-wideband (UWB) and other technologies might all play a role.

And Everything Else

These two features are the core components of the AR Cloud: an accurate three-dimensional map of the physical world (including semantic mapping of the relationships between objects), and the ability to query data to find out exactly where I (and other objects) are located within that map.

It’s like Google Maps on steroids (and Google, of course, is working to create its own AR Cloud).

But the power of the AR Cloud will be unleashed by all of the systems that get ‘attached’ to it.

Privacy, property, data sharing, ownership, identity, artificial intelligence: all of these are associated systems that can take these shared, highly accurate “maps” and turn them into user experiences.

(I would argue that some of these systems should be “baked in” to the core models.)

Any combination of these systems will create new value in ways that we haven’t yet seen, comparable to how mobile computing changed how we could create and access value on the “web”.

We might start with an AR cloud that contains a few ‘maps’ for your local hospital or mall. But as it extends to encompass everything, from streets to parks to entire cities, it will change the paradigm for how we interact not just with technology, but with the physical world itself.