Apple Glasses Could Take Photos Without a Camera

Apple Glasses could take photos. But they might not have a camera.

The ‘design brief’ for the new wearable device ISN’T to create augmented reality glasses, but to a) disrupt EYE GLASSES (a $138B market) and b) simply create a pair of glasses that are beautiful and can do beautiful things.

Being able to “take photos” using your new glasses could be one of the compelling use cases in the absence of rich 3D “scenes” in your living room or on the street. And when combined with in-glass “world filters” it could bring some “pop” to reality.

It’s an extension of the concept behind Snapchat Spectacles. But without having these giant cameras floating on your glasses – in fact, without any cameras at all.

Leveraging 3D Capabilities

One of the toughest challenges in augmented reality is adding three-dimensional “objects” to the physical world. The tools to make this happen have gotten better. The AR Cloud will help to make it happen at global scale.

To simplify the challenge, when you add, say, a digital couch to your living room, it will look “right” when:

- Your device can recognize other objects. It knows that there’s a coffee table, for example. This is important because you want the couch to sit BEHIND the coffee table. Or, if someone walks in front of your digital couch, their body should block the view.

- Your couch is properly lit. Ideally, your device can detect something about the lighting of your living room, and your digital couch is properly adjusted.

ARCore (Google) and ARKit (Apple) are the basic frameworks for developers to create 3D scenes. They’ve both gotten better at object detection and occlusion (hiding and showing objects based on what they’re in front of or behind).
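To make occlusion a little more concrete, here is a minimal sketch of the underlying idea: a per-pixel depth test. It is not how ARKit or ARCore implement it internally. The function name, the one-row "image" and the depth values are all made up for illustration.

```python
def composite_with_occlusion(real_rgb, real_depth, virt_rgb, virt_depth):
    """Per-pixel depth test (illustrative only).

    Show the virtual pixel only where the virtual object is nearer to the
    camera than the real scene. virt_depth holds None wherever the virtual
    object does not cover the pixel.
    """
    out = []
    for real_px, real_d, virt_px, virt_d in zip(real_rgb, real_depth, virt_rgb, virt_depth):
        if virt_d is not None and virt_d < real_d:
            out.append(virt_px)   # virtual object is in front
        else:
            out.append(real_px)   # real world hides it, or nothing to draw
    return out


# One scanline of four pixels. Depths are metres from the camera (assumed values).
real_rgb = ["wall", "table", "table", "wall"]
real_depth = [4.0, 1.5, 1.5, 4.0]
virt_rgb = [None, "couch", "couch", "couch"]
virt_depth = [None, 2.5, 2.5, 2.5]

row = composite_with_occlusion(real_rgb, real_depth, virt_rgb, virt_depth)
print(row)  # ['wall', 'table', 'table', 'couch']
```

The coffee table (1.5 m away) hides the couch (2.5 m away), while the couch hides the wall behind it. The better the depth data, the cleaner those edges look.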

Apple has power-boosted this ability further with the launch of LiDAR support for iPad (and coming soon to iPhone).

LiDAR takes an accurate “scan” of a physical space. This allows a device to know, with greater fidelity, what objects are in the space, how far they are from each other, and which object is in front of the other.

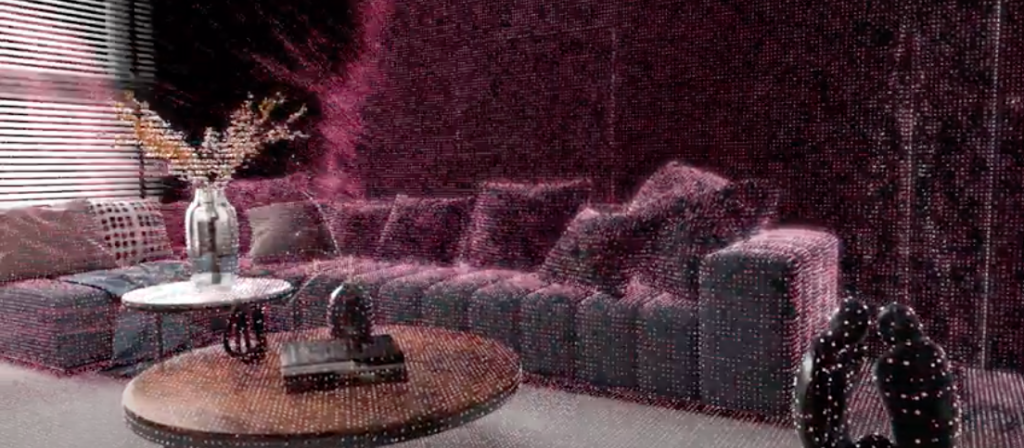

Matterport stitches photos together to do this ‘scan’ but the result is the same: a 3D version of physical space that you can ‘walk around in’. It’s one way to visualize what it means to ‘scan’ a room.

With these combined capabilities IKEA can now “digitally place” a couch in your living room and it can look reasonably realistic. It doesn’t “float” in space like many AR objects once did. It can be persistent because of spatial anchors and the AR Cloud. And it has a higher degree of occlusion, allowing it to hide objects behind it or be hidden when you walk in front.

Apple Glasses: Immersion Next

But bringing these capabilities to a pair of glasses has a tradeoff: you need to pack a lot of power, sensors and technology into something you’re supposed to wear comfortably on your face.

For Apple, these tradeoffs might be too steep: they want to make something beautiful. Sure, the hardware will get there, but it will take some time.

Magic Leap, on the other hand, wanted immersion FIRST. Their bet was that if you could be fooled into believing there was a whale in your living room because that whale looked REAL, then you’d be willing to make the tradeoff and wouldn’t mind wearing something with hints of steampunk.

So if we start with the premise that Apple Glasses may be used to help “pop” reality, while leaving the tougher challenge of projecting 3D scenes into your vision for later, what types of experiences can they create in the short term?

Apple Glasses Pop Reality

One of my concepts is for prescription-ready glasses with ‘progressive lenses’ that go further than lightening or darkening based on time of day. Instead, they’d be able to fully synchronize with your other screens.

For example, “night mode” on your laptop could be achieved by a combination of adjustments to your Apple Glasses along with adjustments to your screen.

But these progressive lenses could also embed the equivalent of photo filters for reality. Think of an Instagram filter. Now, apply that same filter to everything you see.

Cloudy outside? Boost your vision with a bit of brightness, a bit more red. They would be colour LUTs for your eyes.
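For anyone who hasn’t worked with LUTs, here is a rough sketch of the idea using simple per-channel lookup tables. Real colour grading usually relies on 3D LUTs, and nothing here reflects an actual Apple API. The preset name and the gain values are invented for the example.

```python
def build_lut(gain, size=256):
    """Build a 1D lookup table: each 8-bit input level maps to a scaled,
    clamped output level."""
    return [min(255, round(level * gain)) for level in range(size)]


def apply_filter(pixel, luts):
    """Look up each channel of an (r, g, b) pixel in its own table."""
    r, g, b = pixel
    lut_r, lut_g, lut_b = luts
    return (lut_r[r], lut_g[g], lut_b[b])


# Hypothetical "cloudy day" preset: 10% brighter overall, plus 10% more red.
BRIGHTNESS = 1.10
RED_BOOST = 1.10
cloudy_day = (
    build_lut(BRIGHTNESS * RED_BOOST),  # red channel
    build_lut(BRIGHTNESS),              # green channel
    build_lut(BRIGHTNESS),              # blue channel
)

grey_sky = (120, 130, 140)
print(apply_filter(grey_sky, cloudy_day))  # (145, 143, 154)
```

Because the tables are computed once, applying the filter is just a lookup per channel. That is part of why the same “look” could, in principle, be shared between your glasses and a photo.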

But wouldn’t it be great if you could take a photo of what you see?

Cameras Are Creepy

Facebook is promising us AR glasses that will use cameras to “produce multi-layer representations of the world using crowdsourced data, traditional maps, and footage captured through phones and augmented reality glasses”.

Footage captured through glasses? How will you feel when your friend shows up for dinner wearing Facebook AR glasses whose cameras are always on?

We risk a repeat of the famous Glasshole effect. Basically: cameras in your glasses can be creepy.

Maybe we’ll get there. Maybe one day we’ll appreciate that nothing is invisible to all of the cameras and sensors and, I dunno, flying cars.

Until then, I don’t expect Apple (or many others) to include cameras in their ‘AR glasses’.

Combining Phone and Glasses

But maybe you CAN still take photos. I want to be able to capture what I see. I just don’t want to have a camera built into my glasses because it’s just too creepy.

But holding up your iPhone to take a photo is common. And the iPhone is about to get a LiDAR scanner, as noted above.

And so what if you could hold up your phone to take a photo, but the photo would be adjusted to match what you see through your Apple Glasses? And not just shifted “up or down” to account for your phone being held near your chest, but adjusted for angle and rotation as well?

In theory, you could hold your phone at any angle, and LiDAR scanning data could be combined with the data from your Apple Glasses (position, rotation, etc) to adjust the photo to match what you see.
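Here is a rough sketch of the geometry this would involve, under some big assumptions: both devices report their pose in a shared world frame, the depth of each phone pixel is known (measured along the camera’s forward axis), and both views share the same pinhole camera parameters. Every name and number below is hypothetical.

```python
import math


def rot_x(deg):
    """3x3 rotation matrix about the camera's x (right) axis."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def reproject(pixel, depth, src_pose, dst_pose, intr):
    """Map a pixel with known depth from the source view to the destination view.

    Poses are (R, t) with p_world = R * p_cam + t.
    Camera axes: x right, y down, z forward. intr is (fx, fy, cx, cy).
    Returns (u, v, z): destination pixel plus depth in the destination frame.
    """
    fx, fy, cx, cy = intr
    u, v = pixel
    # 1. Unproject the pixel into a 3D point in the source camera frame.
    p_src = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    # 2. Source camera frame -> world frame.
    r_src, t_src = src_pose
    p_world = [a + b for a, b in zip(mat_vec(r_src, p_src), t_src)]
    # 3. World frame -> destination camera frame (inverse of its pose).
    r_dst, t_dst = dst_pose
    x, y, z = mat_vec(transpose(r_dst), [a - b for a, b in zip(p_world, t_dst)])
    if z <= 0:
        raise ValueError("point is behind the destination camera")
    # 4. Project into the destination image.
    return (fx * x / z + cx, fy * y / z + cy, z)


INTR = (500.0, 500.0, 320.0, 240.0)          # assumed intrinsics for a 640x480 view
glasses_pose = (rot_x(0), [0.0, 0.0, 0.0])   # glasses at the world origin
phone_pose = (rot_x(0), [0.0, 0.5, 0.0])     # phone held 0.5 m lower (y points down)

# The centre pixel of the phone photo, looking at something 2 m away.
print(reproject((320, 240), 2.0, phone_pose, glasses_pose, INTR))  # (320.0, 365.0, 2.0)
```

What sits dead centre in the phone photo lands lower in the glasses view, because the phone is looking at the scene from below eye level. Do that for every pixel and you have a photo warped toward your own point of view. A real system would also need to fill in whatever the phone couldn’t see from its angle.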

A hint of how this would work can be seen in the Intel RealSense cameras. These are depth cameras that do a lot of what the LiDAR scanner and cameras do on a new Apple iPad.

The stream captured by the camera can be adjusted in real-time. In one example from a GitHub repository, the feed from a RealSense camera is piped through a Unity Visual Effect Graph.

These types of live AR tracking cameras are increasingly used in Hollywood – and in games.

So now imagine those same effects being based on the ‘settings’ of your Apple Glasses (filter choices) and the position of your head (rotation, height, etc).

Now, you have a photo that is a pretty accurate “capture” of what you see through your eyes, which matches the ‘progressive reality’ filters you’ve selected for your Apple Glasses view, all while you’re not doing much more than casually holding your phone at waist level.

The idea leverages all of the 3D and camera capabilities of your phone, removes the ‘creepiness’ factor (people can still see you’re using your phone), and builds on the capabilities of your Apple Glasses and how you’re seeing the world.

Apple could launch the equivalent of Snapchat Spectacles, but without the need to pop those cameras on the glasses themselves.